Weekly Recap (January 23, 2026)

AIxBio, Claude's Constitution, UK AI+Science, exams with chatbots, R updates (Posit, R Data Scientist, R Weekly), reading what Adam Kucharski is reading, AI vs reality at Salesforce, papers+preprints.

In 2026, I’ll be writing somewhat regularly about the convergence of artificial intelligence with biotechnology (AIxBio) and biosecurity (see the biosecurity tag here). Meanwhile, my friend and colleague Alexander Titus has been on a roll in recent weeks over on his newsletter, The Connected Ideas Project, writing on a similar theme. See his latest essay, the vignette AI × Bio and the Vanishing Middle: Accelerationists vs. catastrophists in the decade defining convergence.

This week Anthropic released the Claude Constitution, a detailed description of their vision for Claude’s behavior and values, used directly in their training process. The full document is available at anthropic.com/constitution. It’s long (84 pages printed) and released as CC0 (public domain). Lawfare’s legal analysis is interesting. And, as Simon Willison points out, two of the contributors are Catholic members of the clergy: Father Brendan McGuire is a pastor in Los Altos with an MS in CS and math, and Bishop Paul Tighe is an Irish Catholic bishop with a background in moral theology.

Irus Braverman at Aeon: Red tape on a blue planet. Do the laws and rules that once protected coral reefs now stand in the way of radical interventions that could save them?

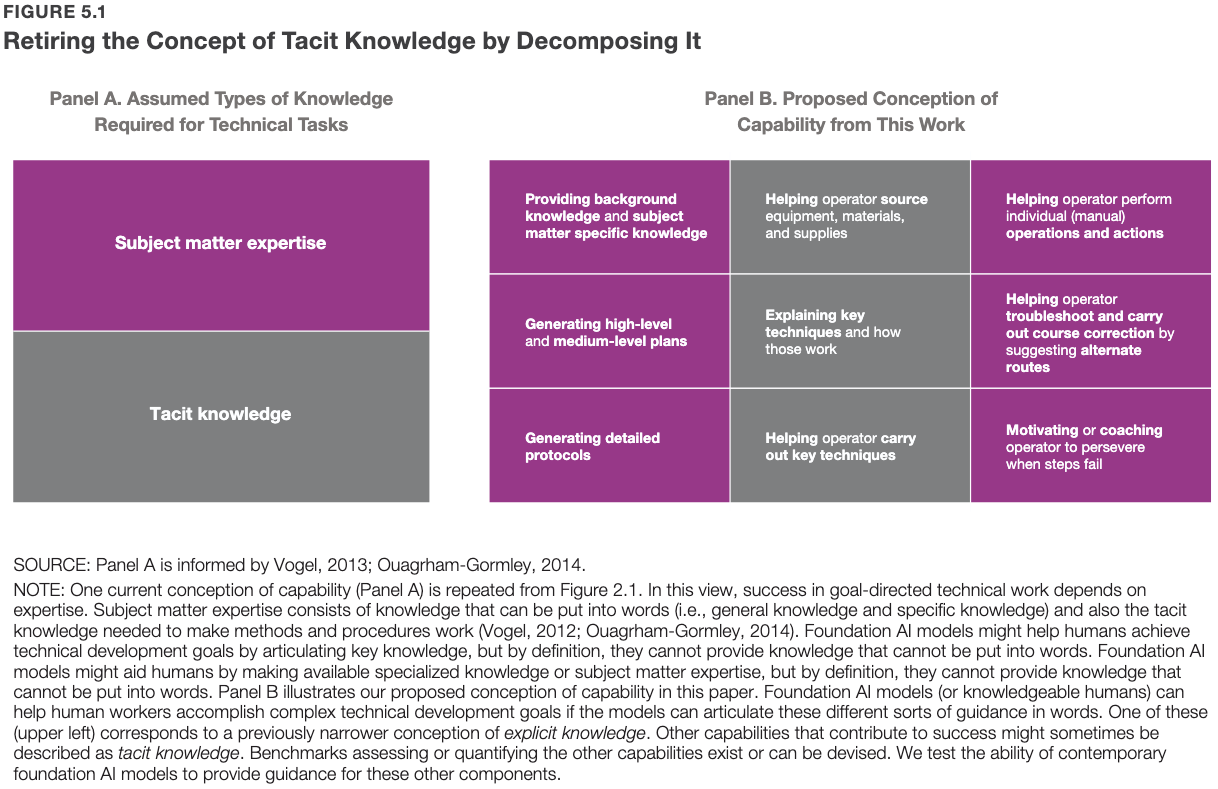

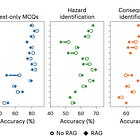

RAND: Contemporary Foundation AI Models Increase Biological Weapons Risk.

The R Data Scientist 2026-01-21: Community & Roundups, Pharma R Ecosystem, R Tooling & Workflow, Spatial & Visualisation, Inference & Bayesian, Academic Research.

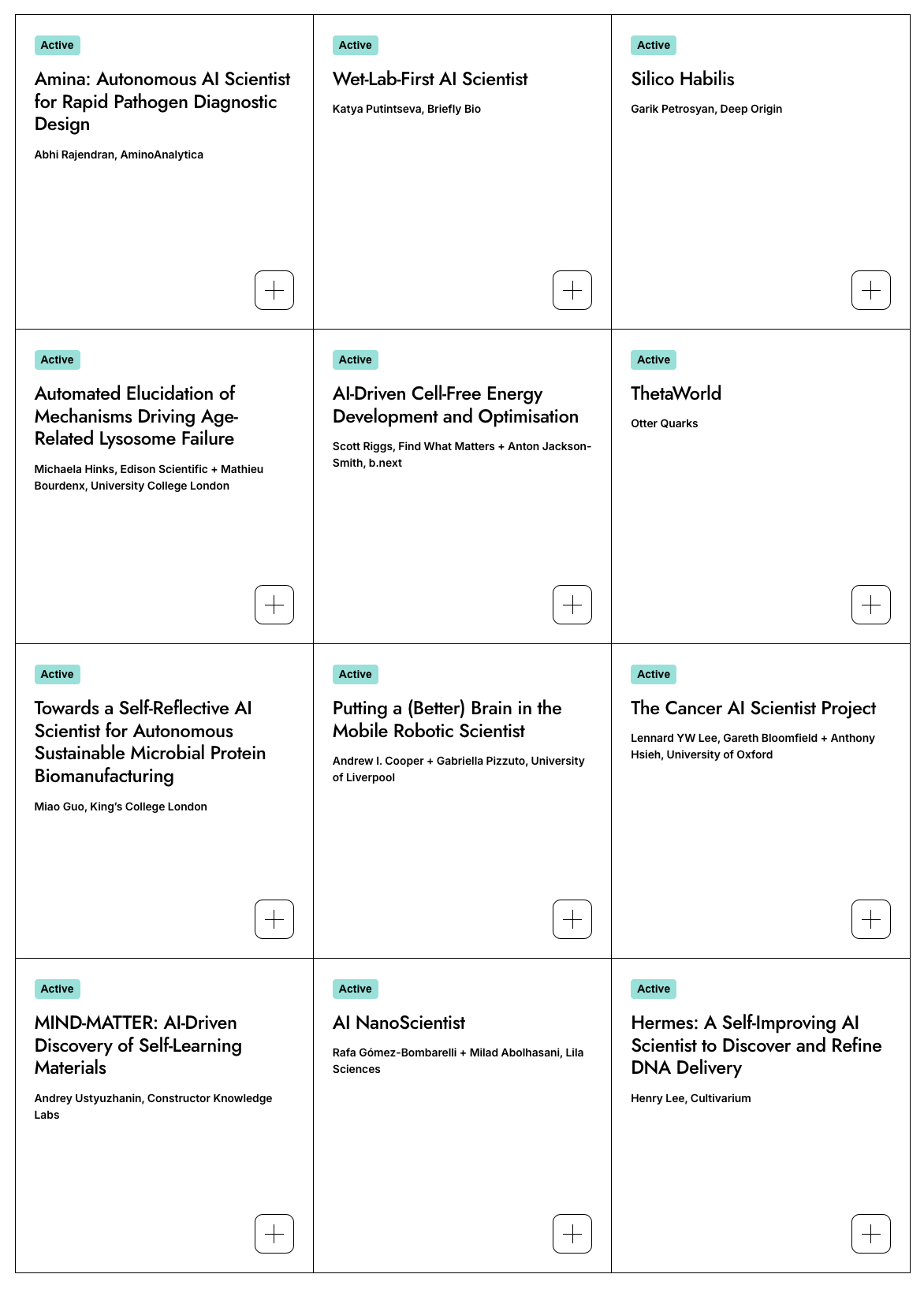

The UK government is backing AI that can run its own lab experiments. See the list of funded projects.

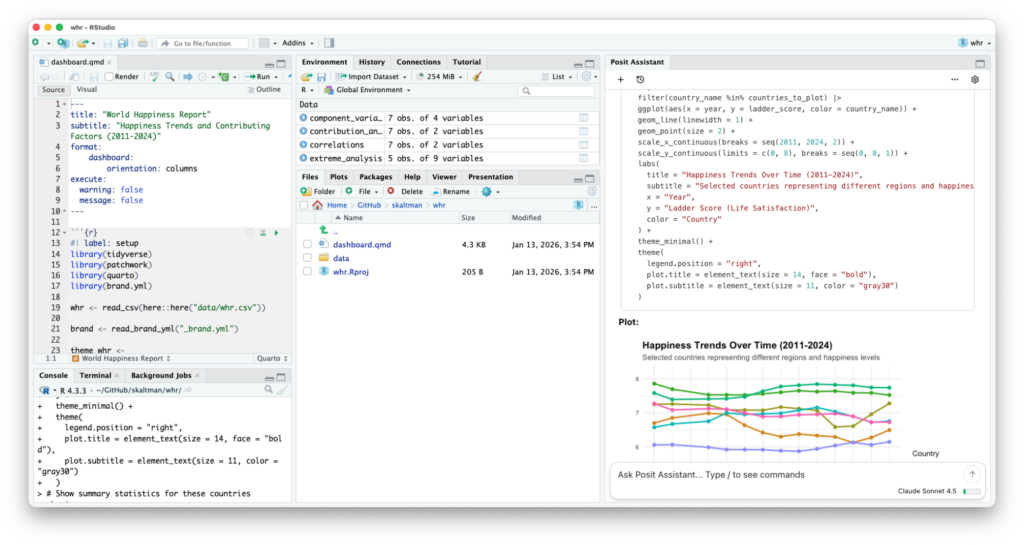

2025 Posit Open Source Year in Review.

R Weekly 2026-W04: Automate PRs with Claude, Accessible line charts, more.

Ploum (Lionel Dricot): Giving University Exams in the Age of Chatbots.

NYT (gift link): Chinese Universities Surge in Global Rankings as U.S. Schools Slip. Harvard still dominates, though it fell to No. 3 on a list measuring academic output. Other American universities are falling farther behind their global peers.

Isabel Zimmerman & Davis Vaughan: Exploring Positron settings.

AI has supercharged scientists—but may have shrunk science. This piece in Science covers the Nature paper linked below, Artificial intelligence tools expand scientists’ impact but contract science’s focus.

These results generally highlight an emerging conflict between individual and collective incentives to adopt AI in science, where scientists receive expanded personal reach and impact, but the knowledge extent of entire scientific fields tends to shrink and focus attention on a subset of topical areas.

I wrote a little more about this here.

China’s ‘Dr. Frankenstein’ Thinks Time Is on His Side. Interesting article on He Jiankui and the gene editing of human embryos.

Simon Couch, Sara Altman: Posit AI Newsletter 2026-01-16. Claude Code + Opus 4.5, Organizational values and AI, terminology, AI agent coming to RStudio.

Adam Kucharski: Things I’ve been reading this past week or so. A few that I picked up on from Adam’s newsletter:

Will Lockett: Reality Is Breaking The “AI Revolution.” Long block quote ahead.

Back in September, Benioff announced that Salesforce had an agentic AI capable of increasing productivity so much that it was handling customer conversations at scale and was doing 50% of the work at Salesforce. This meant that they had fewer cases to worry about, and no longer needed to “actively backfill support engineer roles.” So, Benioff indiscriminately fired 4,000 of their 9,000-strong staff, as this magical AI had replaced them.

[…]

Recently, senior executives at Salesforce have admitted, both internally and publicly, that they massively overestimated AI’s capabilities. They have found that AI simply can’t cope with the complex nature of customer service and totally fails at nuanced issues, escalations, and long-tail customer problems. They even say that it has caused a marked decline in service quality and far more complaints.

But the problems go far deeper than that.

Both employees and executives have said that the company is wasting countless resources on firefighting to stabilise operations since the mass AI layoff. Employees have to spend so much time stepping in to correct the wildly wrong AI-generated responses that AI is wasting more time than it saves. In other words, this AI reduces productivity, not increases it.

But there is also a huge problem here with expertise and skill debt. On top of the firefighting to correct the AI, executives have also highlighted how they are also having to firefight to stabilise their systems from problems that were previously easily solved by staff who had the required experience and skill. However, these staff were fired in the AI layoffs.

[…]

Now, those fully-paid-up members of the “AI revolution” cult will say that this is an example of incorrect AI deployment, and an issue isolated to Salesforce. And, while I do agree that Benioff did a truly horrific job here, that isn’t true at all. You see, this ignorant AI overconfidence and dramatic deployment, followed by a rapid walk back, is happening across the entire economy.

New papers & preprints:

Training large language models on narrow tasks can lead to broad misalignment

Biological insights into schizophrenia from ancestrally diverse populations

orthogene: a Bioconductor package to easily map genes within and across hundreds of species

Clinical genetic variation across Hispanic populations in the Mexican Biobank

Engineering AI co-scientists for statistical genetics applications

The polygenic, omnigenic and stratagenic models of complex disease risk

Prioritizing Feasible and Impactful Actions to Enable Secure AI Development and Use in Biology

Artificial intelligence tools expand scientists’ impact but contract science’s focus (see also Science’s coverage of this Nature paper: AI has supercharged scientists—but may have shrunk science). I wrote a little about this earlier this week.
