The "If-Then" Approach to AI Biosecurity

A Measured Approach to AI+Biosecurity: The "If-Then" Framework from the 2025 NASEM report, The Age of AI in the Life Sciences: Benefits and Biosecurity Considerations.

In a post earlier this week I introduced the new National Academies consensus report, The Age of AI in the Life Sciences: Benefits and Biosecurity Considerations.

I’m going through the report chapter by chapter and will write up a few more thoughts as I make my way through it.

While the full text covers a wide range of topics from automated labs to data infrastructure, one specific recommendation stands out for its pragmatic approach to regulation. Rather than imposing blanket restrictions on AI-enabled biological research based on hypothetical future risks, the report advocates for what it calls an “if-then” framework. This approach acknowledges both the dual-use nature of biotechnology and the need to avoid stifling legitimate scientific progress.

The report makes a critical distinction that often gets lost in biosecurity discussions: capability is not the same as intent. Current AI-enabled biological tools can accelerate protein design, improve vaccine development, and enhance disease surveillance. These same computational approaches could theoretically be misused, but the report emphasizes that today’s tools have significant limitations. Designing simple biomolecules like toxins is within reach of current AI models, but creating novel self-replicating pathogens remains far beyond their capabilities.¹ Physical production of any designed biological agent requires substantial laboratory infrastructure and expertise, representing a major bottleneck regardless of computational advances.

This nuanced assessment matters because overly restrictive policies risk hampering critical medical countermeasure development, basic research into infectious disease biology, and the broader bioeconomy. The COVID-19 pandemic demonstrated how quickly we need to develop vaccines and therapeutics in response to emerging threats. As discussed elsewhere in the report, AI-enabled tools are becoming essential to that speed. Restricting their development based on speculative future misuse scenarios would be scientifically and economically counterproductive.²

The if-then framework offers a smarter alternative. Rather than trying to predict which specific capabilities might emerge and preemptively restricting them, it establishes a continuous monitoring system anchored in observable indicators. The key insight is that significant biological datasets are necessary to train effective AI models. The availability of high-quality training data therefore serves as a leading indicator of emerging capabilities.

Here’s the text of Recommendation 2 (pp. 6-7 of the report):

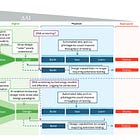

Recommendation 2: The U.S. Department of Defense and the U.S. Artificial Intelligence (AI) Safety Institute should develop an “if-then” strategy to evaluate continuously both the availability and quality of data and emerging AI-enabled capabilities to anticipate changes in the risk landscape (e.g., if dataset “x” is collected, then monitor for the emergence of capability “y”; if capability “y” is developed, then watch for output “z”). Evaluation of AI-enabled biological tool capabilities may be conducted in a sandbox environment.

Example datasets of interest:

If clear associations between viral sequences and virulence parameters become known, then evaluate the capability of AI models to predict or design pathogenicity and/or virulence.

If robust viral phylogenomic sequence datasets linked to epidemiological data become available, then assess for the development of new AI models of transmissibility that could be used to design new threats.

Example AI models that warrant assessment:

If AI models are developed that infer mechanisms of pathogenicity and transmissibility from pathogen sequencing data, then watch for attempts to modify existing pathogens to increase their virulence or transmissibility.

If AI models are developed that could predictably generate a novel replication-competent virus, then assess risk for bioweapon development.

The framework works through a simple logic: if a particular type of dataset becomes available (for example, viral sequences robustly linked to transmissibility data), then monitor for the development of AI models that could predict or design enhanced transmission. If such models are developed, then assess the actual risk using established frameworks that consider not just technical capability but also the expertise, resources, and intent required for misuse.
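To make that chain of logic concrete, here is a minimal Python sketch of how the if-then rules could be written down as data and checked against a list of observed indicators. This is my own illustration, not anything the report specifies: the `Rule` class, the `triggered_actions` function, and the indicator labels (for example `dataset:phylogenomics-linked-to-epidemiology`) are hypothetical names I made up, and the rule text only paraphrases the examples in Recommendation 2.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Rule:
    """One if-then pair: an observable indicator and the follow-up it triggers."""
    condition: str  # the "if": a hypothetical label for a dataset or model indicator
    action: str     # the "then": what to monitor, evaluate, or assess


# Hypothetical labels; the actions paraphrase the examples in Recommendation 2.
RULES = [
    Rule("dataset:sequence-virulence-associations",
         "evaluate whether AI models can predict or design virulence"),
    Rule("dataset:phylogenomics-linked-to-epidemiology",
         "assess for new AI models of transmissibility"),
    Rule("model:infers-pathogenicity-mechanisms",
         "watch for attempts to modify existing pathogens"),
    Rule("model:generates-replication-competent-virus",
         "assess risk for bioweapon development"),
]


def triggered_actions(observed: Iterable[str], rules: List[Rule] = RULES) -> List[str]:
    """Return the follow-up actions whose 'if' condition has been observed."""
    seen = set(observed)
    return [rule.action for rule in rules if rule.condition in seen]


if __name__ == "__main__":
    # Example: only the epidemiology-linked dataset has become available.
    for action in triggered_actions(["dataset:phylogenomics-linked-to-epidemiology"]):
        print(action)
```

The point of the sketch is that nothing happens until an indicator is actually observed: with no observed indicators the function returns an empty list, which mirrors the report's preference for monitoring over preemptive restriction.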

This approach accomplishes several important goals. It avoids premature restrictions based on speculation about future capabilities. It focuses attention on concrete, measurable indicators rather than hypothetical scenarios. It maintains flexibility as the technology evolves. And critically, it allows beneficial research to proceed while maintaining appropriate vigilance.

The report’s second recommendation formalizes this approach, calling for the Department of Defense and the AI Safety Institute to implement such a strategy. Evaluation can occur in controlled sandbox environments, allowing researchers to assess capabilities without risking actual deployment.

Biotechnology and AI are both general-purpose technologies with profound beneficial applications. Adopting this framework is both scientifically and economically sound, and avoids the trap of regulating based on fear or speculation. It ensures that the United States can maintain its competitiveness in biotechnology and AI while still having a mechanism to identify and mitigate genuine risks as they arise. The if-then framework recognizes that effective biosecurity policy must balance legitimate concerns with the imperative to maintain scientific and economic progress. By focusing on observable indicators rather than speculative risks, and by distinguishing between technical capability and actual threat, this approach offers a more measured path forward than blanket restrictions ever could.

¹ For example, see the Claude Opus 4.5 System Card, § 7.2.3 (p. 120), featuring safety & red-teaming work from my colleagues (and former co-workers) at Signature Science.

² See also this excellent essay from my friend and colleague, Alexander Titus: Titus, Alexander. Shock Doctrine in the Life Sciences - When Fear Overwhelms Facts (March 31, 2025). Available at SSRN: http://dx.doi.org/10.2139/ssrn.5428795.

In theory, I'm totally on board with the monitor-and-respond approach vs. "no biology for everyone because we are afraid that maybe some evil person will do evil things." But I don't think your footnote 1 is relevant to the claim you make about AI overall. It may be that general purpose LLMs like Claude Opus 4.5 can't design a novel pathogen, but biological design tools like AlphaFold and Evo can design novel proteins predicted to have the same biological targets as known toxins,[1] and Evo has designed an entire working phage genome from scratch.[2]

Also, it seems like the dataset usage test approach is still targeting capabilities, when we really want to be targeting usage. Hopefully the intent is more towards some sort of verification of whose key is accessing the dataset, and then being able to trace that access in case more red flags show up from the same source, like a systematic "know your customer" approach.

[1] https://www.science.org/content/article/made-order-bioweapon-ai-designed-toxins-slip-through-safety-checks-used-companies

[2] https://www.biorxiv.org/content/10.1101/2025.09.12.675911v1

Hey, great read as always. What specific "if" scenarios?