How do you design AI-resistant assignments?

The Anthropic Education Report found that nearly 50% of Claude conversations about grading delegated assessment entirely to the AI. How does one actually design AI-resistant assignments?

Cite this post: Turner, S.D. (2025, December 15). How do you design AI-resistant assignments? Paired Ends. https://doi.org/10.59350/q9mst-yaa40.

Talia Argondezzi published a humorous essay in McSweeney’s Internet Tendency back in September: “I Need AI to Write Better Lesson Plans So My Students Stop Using AI to Write Their Papers.” This one hits hard; it left me caught between laughing and crying.

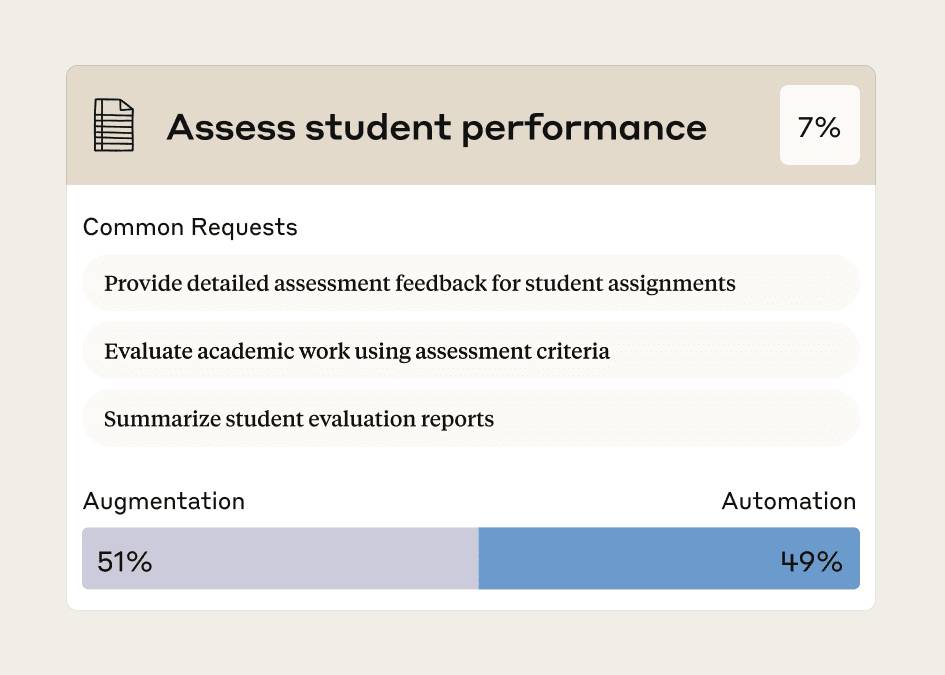

In a post back in September, I linked to the Anthropic Education Report: How educators use Claude. Anthropic analyzed 74,000 anonymized conversations to understand how university educators are using AI. In its sample, 57% of higher ed instructors’ AI chats involved developing curricula, 13% involved conducting academic research, and 7% involved assessing students’ performance. Anthropic says educators used AI for grading less often than for other tasks. But 48.9% of the Claude conversations about grading turned the task fully over to the bot, in ways the researchers found “concerning.”

From the article (emphasis added):

> Assessments also are starting to look different. While student cheating and cognitive offloading remain a concern, some educators are rethinking their assessments.
>
> “If Claude or a similar AI tool can complete an assignment, I don’t worry about students cheating; I [am] concerned that we are not doing our job as educator[s].”
>
> In one particular Northeastern professor’s case, they shared that they “will never again assign a traditional research paper” after struggling with too many students submitting AI-written assignments. Instead, they shared: “I will redesign the assignment so it can’t be done with AI next time.”

I appreciate the sentiment. In my own faculty meetings, in seminars across UVA, and in what feels like a constant stream of papers and blog posts, I hear a similar refrain: “we just have to redesign assignments so AI cannot do them.”

How do you actually do this?

Sure, in-class handwritten blue book exams might work in some cases. And maybe take-home assignments for upper-level PhD courses could be designed to be AI-resistant. But how does one actually create out-of-class assignments and assessments that AI can’t complete for a first-year graduate course, or even an upper-level undergraduate course?

When I was on the faculty of the medical school, I taught an introductory Biological Data Science graduate course (course website, textbook). Grades do not matter much for biomedical PhD students: the course was pass/fail, and I passed everyone. It was an elective, and students took it because they wanted (and needed) to learn the material. On day 1, I leveled with them: you are here because you want to learn this stuff, and the only way that happens is by coming to class, working through the in-class problems, and completing the homework. In other words, you have to lean into the productive struggle.

I’m now back at UVA in the School of Data Science. I don’t have to teach my first year, but next year I’ll be teaching several courses in the Genomics Focus of our MSDS curriculum, and later some undergraduate courses. These courses will cover fundamentals and advanced topics in genomics, with a strong quantitative focus. Formative and summative assessments will matter much more here than they did in that earlier PhD bioinformatics course.

So I hear and appreciate the “just make assignments that AI cannot solve” advice, but I do not actually know how to do that in a realistic way.

Maybe that felt possible in the GPT-3.5 / Claude 2.x / Gemini 2 era. With GPT-5.1, Claude Opus 4.5, Gemini 3, and especially with extended-thinking modes, designing truly AI-resistant take-home assignments and assessments for undergrad or first-year graduate courses feels impossible.

How are you approaching this problem? I would love to hear specific strategies,¹ examples, or even failures.²

A trusted colleague recently shared that he allows students to use AI whenever and however they choose for out-of-class assignments, but then gives an oral exam on the assignment to assess understanding and mastery. If a student uses AI to complete the entire assignment, then uses AI to learn and understand everything about it, did they not achieve the goal? If they completed the assignment correctly and demonstrated mastery of the material in the oral exam, does it matter that they used AI as a tutor and assistant to get there? I think not.

After writing this essay, I pasted it into GPT-5.1 with extended thinking turned on and asked for advice. Here are the suggestions it gave me. Considering I was asking an AI how to write questions that an AI can’t answer, the suggestions weren’t terrible.