How AI assistance impacts the formation of coding skills

Research from scientists at Anthropic suggests productivity gains come at the cost of skill development.

A few days ago Anthropic published research that should make data science educators like me sit up and take note. The preprint is on arXiv (and you can read their blog post summarizing the paper here):

Shen, J. H., & Tamkin, A. (2026). How AI Impacts Skill Formation. arXiv 2601.20245. https://doi.org/10.48550/arXiv.2601.20245

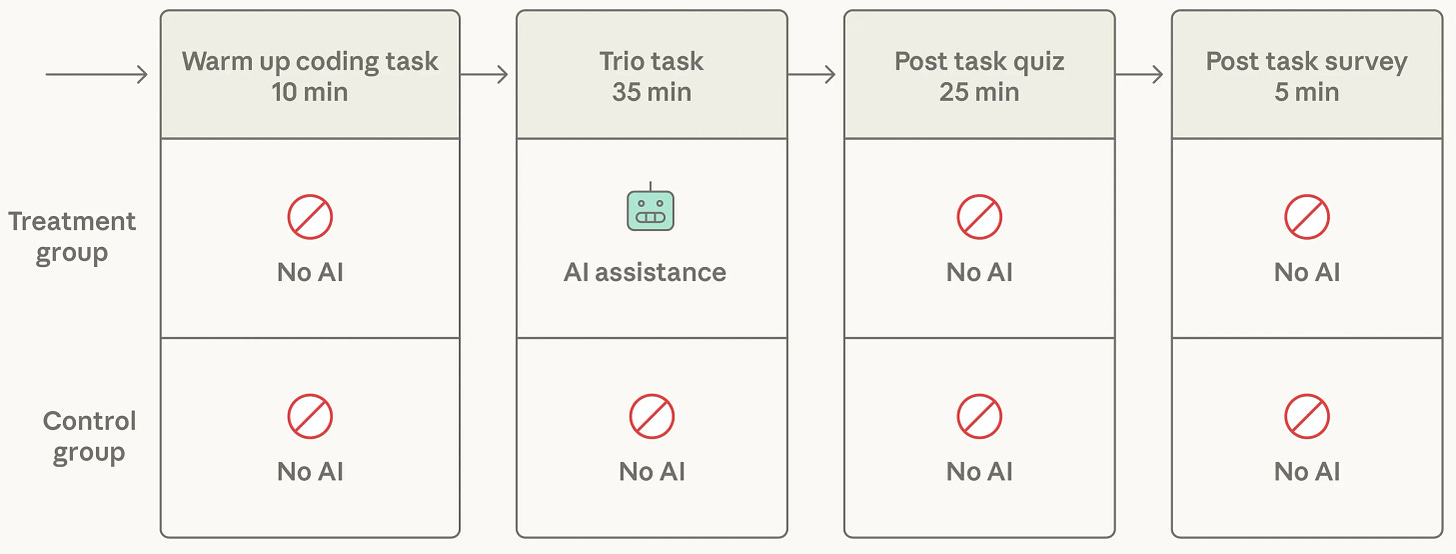

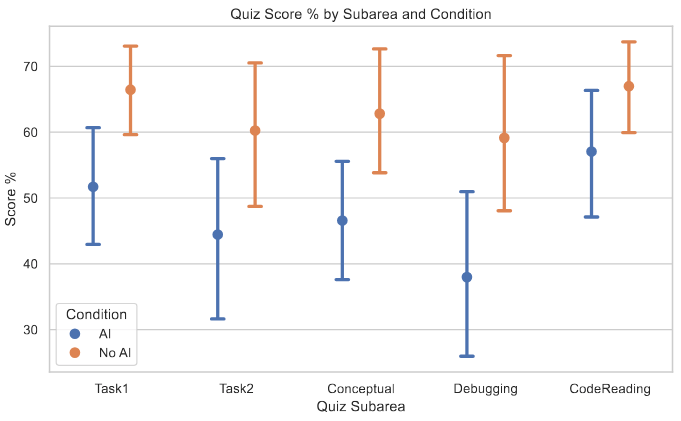

In a randomized controlled trial with software developers learning a new Python library, those using AI assistance scored 17% lower on a comprehension quiz than those who coded by hand. That’s nearly two letter grades worse, even though the AI group finished only marginally faster.

Study participants learned the Trio library through a self-guided tutorial; those in the AI group had access to an assistant that could generate correct code on demand. The assessment tested four skill types: debugging, code reading, code writing, and conceptual understanding.

The largest performance gap appeared in debugging, suggesting AI assistance particularly undermines the ability to identify when code is wrong and understand why.

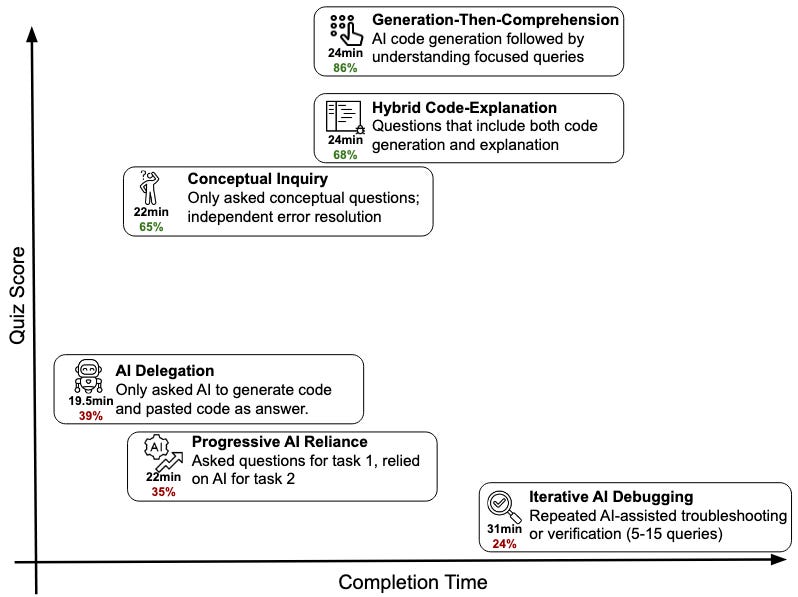

The qualitative analysis of interaction patterns was interesting. Low-scoring participants fell into three camps: those who delegated everything to AI, those who started independently but gradually offloaded more work, and those who used AI as a debugging crutch. High-scoring participants asked conceptual questions, requested explanations alongside generated code, or used AI to verify their understanding rather than replace it.

This creates a genuine dilemma for teaching. Our students will enter workplaces where AI coding tools are standard.[1] We need to prepare them for that reality. But if they learn to rely on AI too early, before developing core debugging and comprehension skills, they won’t have the foundation to provide meaningful oversight of AI-generated code.[2]

The researchers note an important distinction: Anthropic’s earlier work showed AI can speed up tasks by 80% when people already have the relevant skills. The problem appears specifically during skill acquisition. This suggests a phased approach might work: build foundational competencies first, then introduce AI tools once students can critically evaluate what those tools produce.

The challenge is designing coursework that teaches both independent problem-solving and strategic AI use, without the latter undermining the former. I’m not totally sure how to do this, but I think it’s our job as educators to try.[3]

[1] I just left biotech to come back to academia; I can attest that this is categorically true.

[2] A conclusion that, I suspect, will surprise precisely 0% of you reading this.

[3] Or, in the words of a wise one: “Do, or do not. There is no try.”

Thanks for writing this; it clarifies a lot. Really insightful! I wonder if the issue is less with AI itself and more about teaching students to use it properly, especially for skills like debugging.