Weekly Recap (January 30, 2026)

Global Biodiversity Framework, Colossal+Conservation, DARPA GUARDIAN, NTI on AIxBio, NeurIPS hallucitations, Claude in Excel, AI@UVA, Amodei essay, R updates (R Weekly, R Data Scientist), papers.

It was a very big week in data science, AI, biotech, and biosecurity. I’ve been catching up on some reading with all the snow we had shutting everything down, which is why this particular recap is a bit longer than most. If you find this newsletter useful, share with your colleagues, or better yet, buy me a coffee! Enjoy. —Stephen

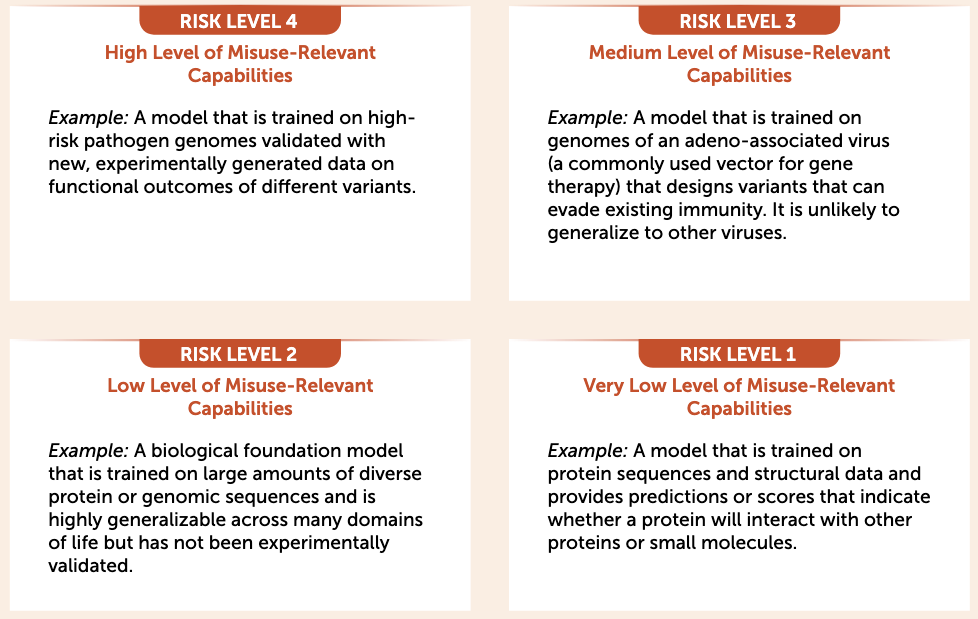

New report just published from the Nuclear Threat Initiative (NTI): A Framework for Managed Access to Biological AI Tools. Advances at the intersection of AI and the life sciences have produced powerful biological AI tools with major benefits, but they also raise biosecurity concerns due to their potential misuse, including facilitating the engineering of dangerous pathogens. This report proposes a tiered, managed-access framework to promote responsible use by defining risk levels, establishing criteria for legitimate users, and outlining practices for user verification and oversight.

Special series at Nature Reviews Biodiversity: Kunming-Montreal Global Biodiversity Framework Targets. The Kunming-Montreal Global Biodiversity Framework (GBF) sets out 23 global targets to be met by 2030 that aim to help halt and reverse biodiversity loss. This series draws together Nature Reviews Biodiversity articles that outline challenges, propose solutions and summarise progress in meeting the GBF targets. The review I wrote while at Colossal Biosciences is part of this collection: Genome engineering in biodiversity conservation and restoration. I wrote a blog post about this review, as well as another blog post about some follow-up correspondence.

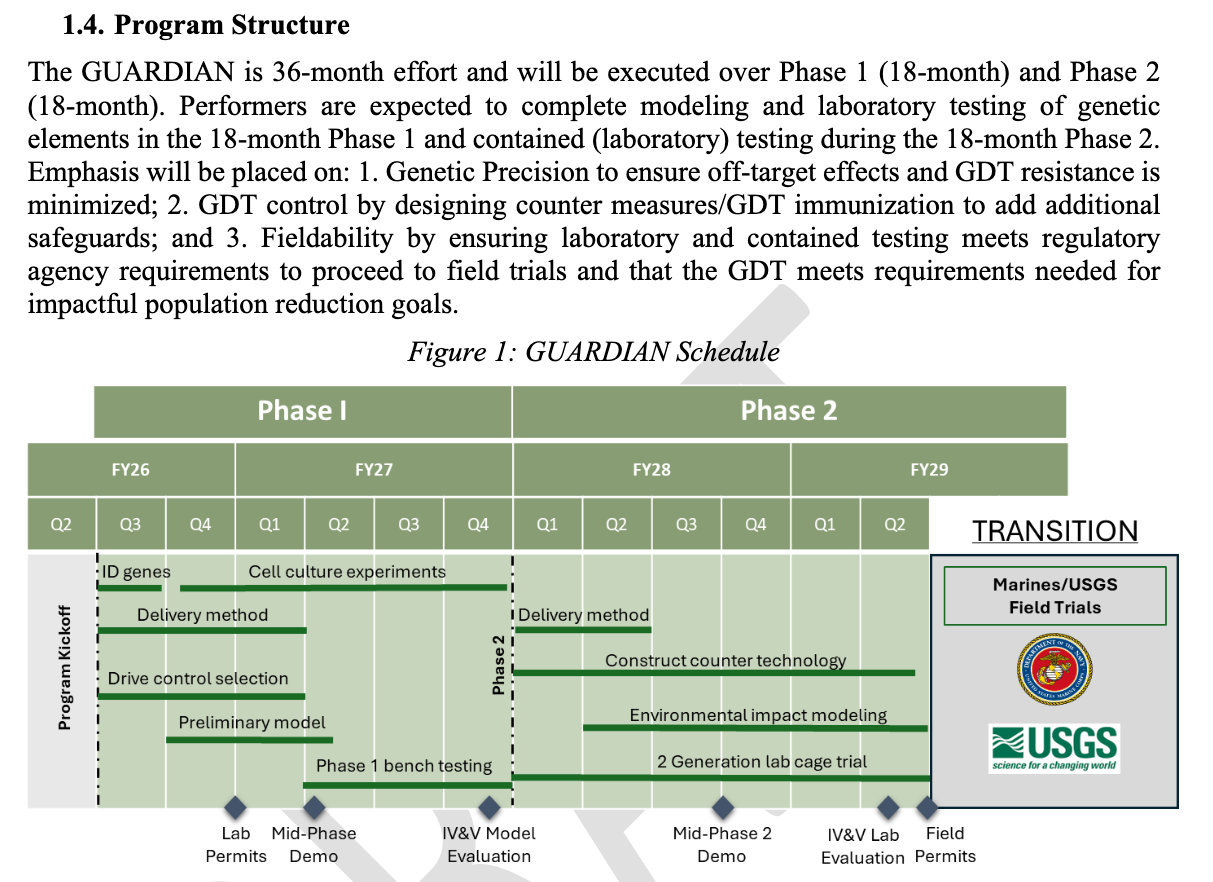

Speaking of Colossal, I got to spend two years working with some of the most talented genome engineers I’ve ever met. I also got to work with Colossal Foundation’s conservation team, and I led an effort to write a review on de-extinction technology and its application to conservation. In this review we touched on using gene drive technologies for invasive species control. I’m grateful for the time I got to spend at Colossal with such talented scientists, and when I saw the announcement about DARPA’s GUARDIAN program, I immediately signed up to present at the industry day this week. The goal of GUARDIAN (Genetic Utilization for Advanced Regulation and Defense of Indigenous And Native species) is to develop gene drives to eradicate invasive species, starting with the brown tree snake (BTS) on Guam. It’s a super interesting and impactful program, both economically and from the conservation perspective. I’m looking forward to seeing this get off the ground, and hope to be a part of it.

The 2026 Applied Machine Learning Conference call for talks and workshops is now open. This is a two-day, in-person event that brings together data scientists, AI engineers, computational researchers, and other technical leaders from across the country to share knowledge, learn from each other, and advance the fields of applied machine learning, AI, and scientific computing.

When two years of academic work vanished with a single click. This Nature career column tells Marcel Bucher’s story of how turning off ChatGPT’s “data consent” option caused all of his chat history to be instantly erased. The story is unbelievable (literally: I’m not sure I totally believe the details here). Regardless of how it happened, I feel bad for the guy, especially with the entire anti-AI hive mind of Bluesky mercilessly dunking on him.

Asimov Press and Maximilian Schons: Building Brains on a Computer. A roadmap for brain emulation models at the human scale.

Nature: Key NIH review panels due to lose all members by the end of 2026.

Jesse Johnson (Scaling Biotech): How far should we be trusting LLMs?

Dynamic Alt Text Generation in shiny Apps.

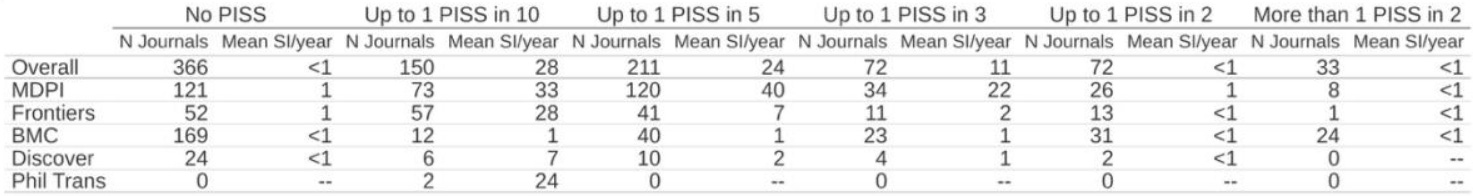

El País: PISS (Published In Support of Self). Original paper on arXiv: The Issue with Special Issues: when Guest Editors Publish in Support of Self. The figure legends and section headers in this preprint are superb: “Worst offenders account for a majority of PISS,” “PISS is prevalent at journals hosting many special issues,” “PISS is concentrated in some journals,” “PISS is concentrated in journals publishing many special issues.”

Nathan Witkin: Against the METR graph. “METR itself acknowledges, around 34% of its underlying tasks have public solutions, meaning that they are almost certainly a part of frontier models’ training data.”

R Weekly 2026-W05: LLMs & plots, soil flux, futurize.

Adam Kucharski: The pop quizification of knowledge.

MIT Technology Review: America’s coming war over AI regulation.

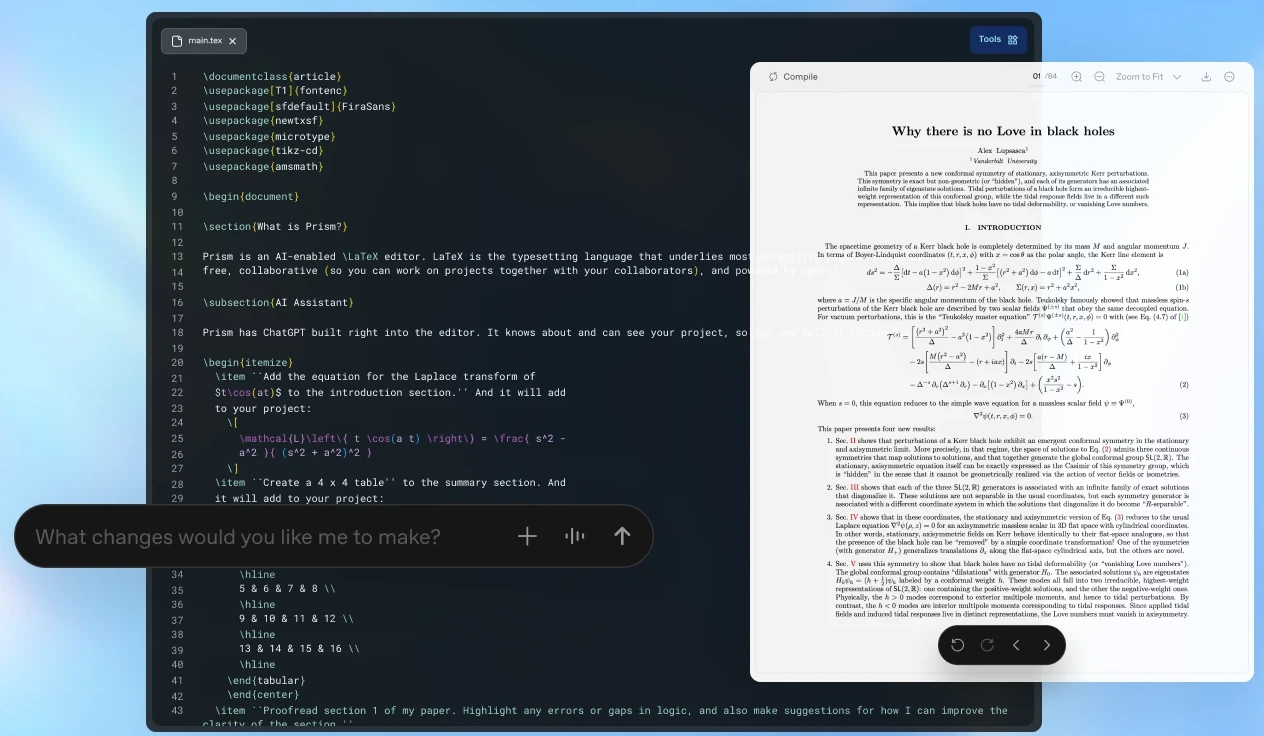

OpenAI introduces Prism, a free, LaTeX-native workspace that integrates GPT‑5.2 directly into scientific writing and collaboration: https://openai.com/prism/. I logged in and played around a bit. It’s interesting. The marginal cost of producing legitimate-sounding science was already approaching zero. I worry that this kind of thing pushes that marginal cost of generating scientific-sounding slop even lower, while the LaTeXification makes it “look” nicer. See the NeurIPS hallucitation notes a few points down.

Claude in Excel: While OpenAI is throwing everything at the wall to see what sticks, Anthropic continues to push on enterprise tooling and workflows. “It reads complex multi-tab workbooks, explains calculations with cell-level citations, and safely updates assumptions while preserving formula dependencies. Create pivot tables and charts to visualize your data, or upload files directly into your workflow.”

Science Is Drowning in AI Slop (gift link).

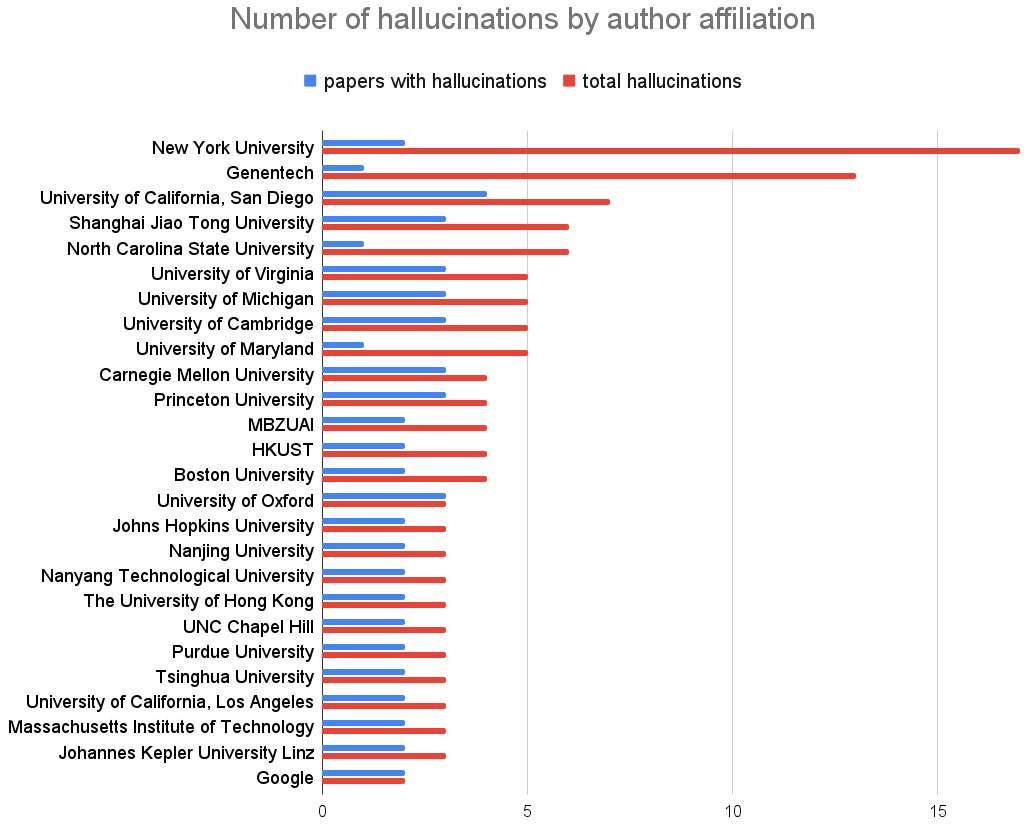

NeurIPS, one of the top AI conferences, has seen [submissions] double in five years. ICLR, the leading conference for deep learning, has also experienced an increase, and it appears to include a fair amount of slop: An LLM detection start-up analyzed submissions for its upcoming meeting in Brazil and found more than 50 that included hallucinated citations. Most had not been caught during peer review.

[…]

A similar influx of AI-assisted submissions has hit bioRxiv and medRxiv, the preprint servers for biology and medicine. Richard Sever, the chief science and strategy officer at the nonprofit organization that runs them, told me that in 2024 and 2025, he saw examples of researchers who had never once submitted a paper sending in 50 in a year.

The Moral Education of an Alien Mind. Anthropic’s newly published Claude’s Constitution lays out a virtue ethics framework that treats its AI as a moral agent trained to exercise judgment, refuse unethical commands, and embody a distinctly liberal set of values. The document makes explicit that building advanced AI is not just a technical project but a moral one, an attempt by a private company to raise an alien mind to be good, with all the cultural, political, and commercial tensions that entails.

The AI Exchange at UVA is a new biweekly newsletter and podcast exploring how AI is reshaping teaching, research, and personal productivity. You’ll hear conversations with faculty and staff experimenting with AI, get tips and tools for using AI effectively (and responsibly), and hear more about research and innovation happening in AI across UVA.

Julia Silge: Use GitHub Copilot with Positron Assistant.

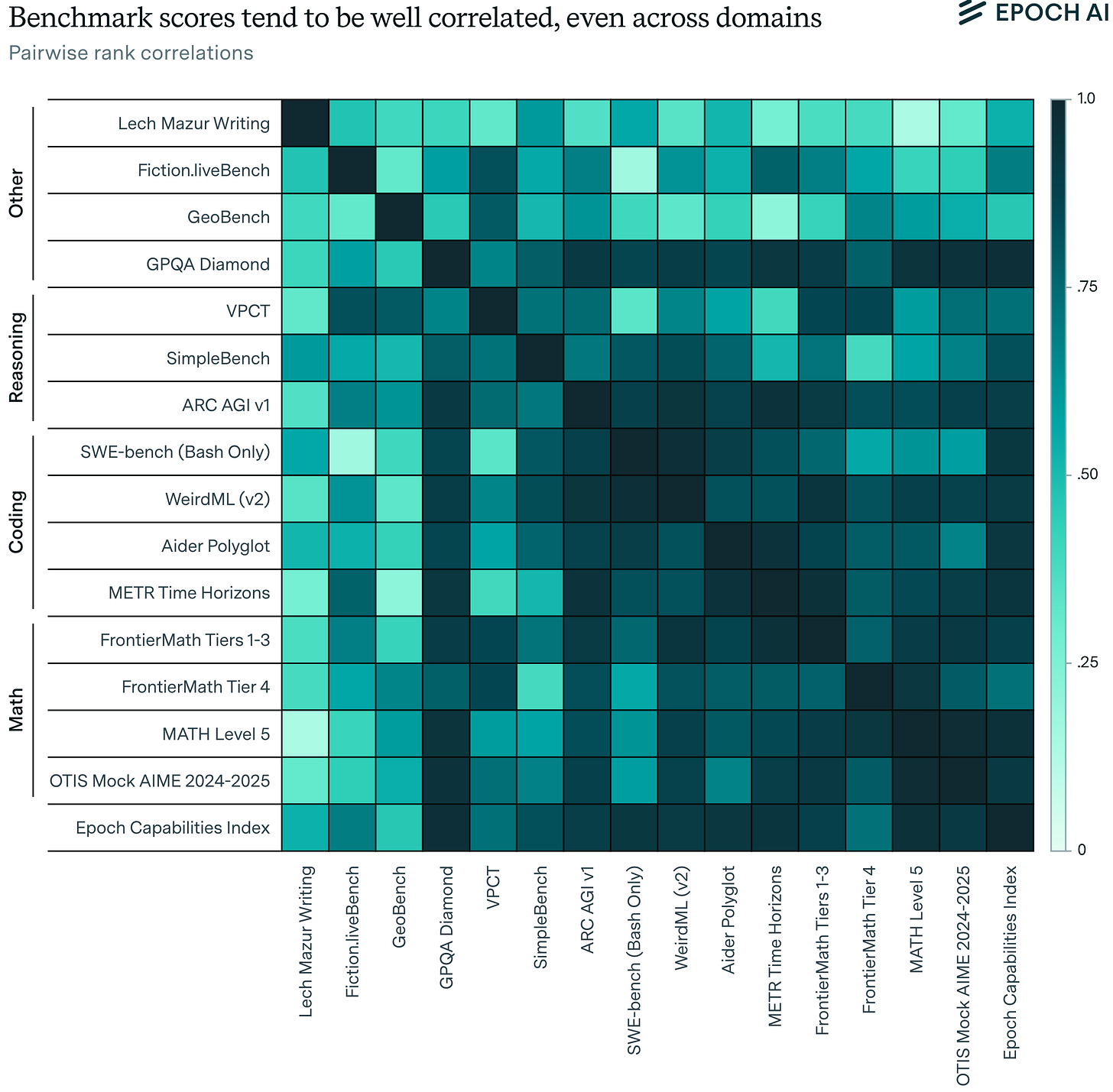

Luke Emberson at Epoch AI: Benchmark scores are well correlated, even across domains.

Models that are good at math benchmarks tend to be good at coding and reasoning benchmarks too, pointing to a common factor driving AI capabilities. We find that AI benchmark scores are nearly as correlated across domains (0.68) as within them (0.79).

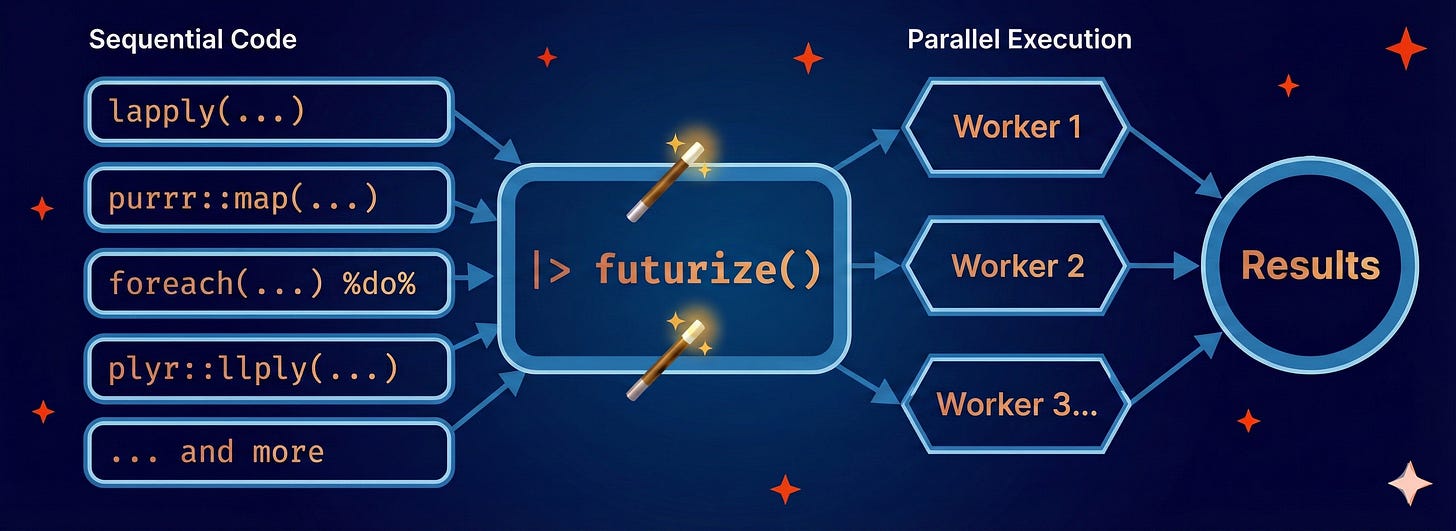
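To make the "common factor" idea concrete, here is a minimal simulated sketch in base R. It is not Epoch AI's data or method: the latent general factor `g`, the domain skills, the weights, and the benchmark names (`math_a`, `code_a`, etc.) are all hypothetical choices for illustration. It only shows that when one shared factor dominates, cross-domain correlations end up nearly as high as within-domain ones.

```r
# Simulated illustration only: these are NOT Epoch AI's data or methods.
set.seed(42)

n_models <- 200

# Hypothetical latent "general capability" shared by every benchmark
g <- rnorm(n_models)

# Hypothetical domain-specific skills
math_skill <- rnorm(n_models)
code_skill <- rnorm(n_models)

# Each benchmark score = general factor + smaller domain factor + noise
make_score <- function(domain_skill) {
  1.0 * g + 0.5 * domain_skill + rnorm(n_models, sd = 0.5)
}

scores <- data.frame(
  math_a = make_score(math_skill),
  math_b = make_score(math_skill),
  code_a = make_score(code_skill),
  code_b = make_score(code_skill)
)

# Pairwise Pearson correlations between benchmarks
r <- cor(scores)

# Average correlation between benchmarks in the same domain
within_domain <- mean(c(r["math_a", "math_b"], r["code_a", "code_b"]))

# Average correlation between benchmarks in different domains
cross_domain <- mean(r[c("math_a", "math_b"), c("code_a", "code_b")])

cat(sprintf("within-domain: %.2f, cross-domain: %.2f\n",
            within_domain, cross_domain))
```

Shrinking the weight on `g` relative to the domain skills pulls the cross-domain correlation down while leaving the within-domain one high, which is the pattern you would expect if capabilities were mostly domain-specific.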

futurize: Parallelize Common Functions. The futurize package is the latest addition to the Futureverse project. It lets you simply add futurize() to any number of vectorized functions, and it’ll handle transpiling that to whatever framework is needed to parallelize the action (whether that’s furrr, future, BiocParallel, etc.). For example:

```r
library(futurize)
plan(multisession)

# Create some x values and a "slow" square root function
x <- 1:10
fn <- function(x) {
  Sys.sleep(1)
  sqrt(x)
}

# Base R
## Takes 10 seconds
lapply(x, fn)
## Takes about 1 second, given enough parallel workers
lapply(x, fn) |> futurize()

# purrr
## Takes 10 seconds
purrr::map(x, fn)
## Takes about 1 second, given enough parallel workers
purrr::map(x, fn) |> futurize()
```

Matt Lubin (Biosecurity Stack): Five Things: Jan 25, 2026: Claude’s Constitution, paper on AIxBiosecurity, Davos speeches, contra METR, LLM creativity test.

Five things that happened/were publicized this past week in the worlds of biosecurity and AI/tech:

Anthropic shares “Claude’s Constitution”

Paper published on AIxBio threats

Davos: AI bigwigs feel compelled to race to AGI

Scathing takedown of the highly influential METR graph

Evaluating the creativity of LLM language output

How the ‘confident authority’ of Google AI Overviews is putting public health at risk.

New papers & preprints:

Protein Language Modeling beyond static folds reveals sequence-encoded flexibility

AstraKit: Customizable, reproducible workflows for biomedical research and precision medicine

Genetics and environment distinctively shape the human immune cell epigenome

nf-core/proteinfamilies: A scalable pipeline for the generation of protein families

RLBWT-Based LCP Computation in Compressed Space for Terabase-Scale Pangenome Analysis

Fault-tolerant [ancient] pedigree reconstruction from pairwise kinship relations

Construction of complex and diverse DNA sequences using DNA three-way junctions

Competition in human genetic technologies: The current US legal landscape

Mammalian genome writing: Unlocking new length scales for genome engineering

PopMAG: A Nextflow pipeline for population genetics analysis based on Metagenome-Assembled Genomes

ro-crate-rs: Development of a Lightweight RO-Crate Rust Library for Automated Synthetic Biology