Weekly Recap (February 6, 2026)

Positron + Jupyter, Claude Opus 4.6, Vicki Boykis at AMLC, Emily Riederer, Wes McKinney, Codex, CC for science, R updates (R Data Scientist, R Works, R Weekly, R-Ladies, rOpenSci), AlphaGenome, ...

Vicki Boykis will be the keynote speaker at the 2026 Applied Machine Learning Conference here in Charlottesville. The call for talks and workshops is open. Deadline is Feb 22.

Posit announces the Positron Notebook Editor for Jupyter Notebooks. Environment management, a variables pane, a data explorer, and an AI assistant are all built in.

Claude Opus 4.6 was released. It seems like most of the benchmarks are being completely saturated. One note of interest from the model card: in the third-party alignment assessment from Apollo, they found such high levels of “self-awareness” that they essentially gave up.

Apollo Research was given access to an early checkpoint of Claude Opus 4.6 on January 24th and an additional checkpoint on January 26th. During preliminary testing, Apollo did not find any instances of egregious misalignment, but observed high levels of verbalized evaluation awareness. Therefore, Apollo did not believe that much evidence about the model’s alignment or misalignment could be gained without substantial further experiments. Since Apollo expected that developing these experiments would have taken a significant amount of time, Apollo decided to not provide any formal assessment of Claude Opus 4.6 at this stage. Therefore, this testing should not provide evidence for or against the alignment of Claude Opus 4.6.

Emily Riederer on the Posit Test Set podcast. I’ve linked to Emily’s blog posts, tutorials, and talks on this newsletter in the past (e.g., my Python for R Users post). I subscribe to her blog (you should too). In this episode, Emily “reflects on her journey through R, Python, and SQL… weighs in on the delicate art of learning in public, why frustration often makes the best teacher, and how to find your niche by solving the boring problems.”

Bridging the Digital to Physical Divide: Evaluating LLM Agents on Benchtop DNA Acquisition. This new RAND report evaluated frontier LLMs on their ability to autonomously design DNA oligonucleotides for benchtop synthesis, assembly via polymerase cycling assembly (PCA), and cloning into plasmids, using eGFP and influenza HA segment 4 as targets. The key finding: the latest generation of models (GPT-5, Opus 4.5, Gemini 3 Pro) consistently designed sequences meeting all criteria, including the critical step of reverse complementing alternating oligos for PCA assembly, which earlier models universally missed. In a physical validation, ChatGPT-designed sequences were actually synthesized, assembled, cloned into a vector, and expressed in E. coli to produce functional fluorescent protein, demonstrating that LLM agents can now guide the digital-to-physical transition in DNA acquisition with minimal human intervention. However, the more capable models also frequently refused biosecurity-adjacent requests, with o3, Opus 4, and GPT-5 denying influenza HA tasks, while less restricted models like GPT-4.1 and Sonnet 4 attempted but failed at the reverse complementing step. The authors frame this as an early warning indicator for biosecurity, noting that while DNA acquisition is just one step in the biological weapons risk chain, reliable automation of this bottleneck by generalist AI agents could lower barriers for non-expert actors.

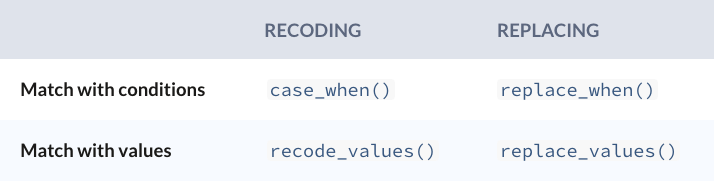
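To make the “reverse complementing alternating oligos” step concrete, here’s a toy Python sketch: chop a sequence into overlapping fragments, then reverse-complement every other one so the odd-numbered oligos read as the bottom strand and anneal to their neighbors through the overlaps. The sequence, oligo length, overlap, and function names are all made up for illustration. Real PCA design tools also balance melting temperatures, avoid hairpins, and tune overlap lengths, none of which this does.

```python
# Toy illustration of PCA oligo layout: overlapping fragments, with every
# other fragment reverse-complemented so it reads as the bottom strand.
# Hypothetical helper names; not a real design tool (no Tm balancing,
# no secondary-structure checks).

COMPLEMENT = str.maketrans("ACGTacgt", "TGCAtgca")


def reverse_complement(seq: str) -> str:
    """Complement each base, then reverse the string."""
    return seq.translate(COMPLEMENT)[::-1]


def split_overlapping(seq: str, oligo_len: int, overlap: int) -> list[str]:
    """Cut seq into fragments of oligo_len that overlap by `overlap` bases."""
    if not 0 < overlap < oligo_len:
        raise ValueError("need 0 < overlap < oligo_len")
    step = oligo_len - overlap
    fragments = []
    start = 0
    while True:
        fragments.append(seq[start:start + oligo_len])
        if start + oligo_len >= len(seq):
            break  # this fragment already reaches the end of the sequence
        start += step
    return fragments


def pca_oligos(seq: str, oligo_len: int = 40, overlap: int = 20) -> list[str]:
    """Keep even-indexed fragments as top strand; reverse-complement odd ones."""
    fragments = split_overlapping(seq, oligo_len, overlap)
    return [f if i % 2 == 0 else reverse_complement(f)
            for i, f in enumerate(fragments)]


if __name__ == "__main__":
    toy = "ATGCGTACGTTAGCCATGACGTTAGC"  # arbitrary 26-nt toy sequence
    for oligo in pca_oligos(toy, oligo_len=10, overlap=4):
        print(oligo)
```

Skip the reverse complement on the odd fragments and every oligo is top strand, so no two of them can anneal. That’s the failure mode the report describes in earlier models.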

dplyr 1.2.0 seems like it was purpose-built for a project I’m working on.

filter_out(), the missing complement to filter(), and accompanying when_any() and when_all() helpers.

recode_values(), replace_values(), and replace_when(), three new functions that join case_when() to create a cohesive family of powerful tools for recoding and replacing.
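The interesting part of filter_out() is missing values. As I understand it, filter(!cond) drops rows where cond is NA (because !NA is still NA), whereas filter_out() drops only rows where cond is TRUE and keeps the rest. Here is a plain-Python sketch of those semantics, with None standing in for NA. The function names only mirror the dplyr verbs and are hypothetical. Treating when_any()/when_all() as R-style three-valued OR/AND is my assumption, so check the dplyr docs for the actual behavior.

```python
# Plain-Python sketch of filter() vs filter_out() semantics, with None as NA.
# Names mirror dplyr verbs but are hypothetical; this is not the dplyr API.
from typing import Callable, Optional

Row = dict
Cond = Callable[[Row], Optional[bool]]


def when_any(*conds: Cond) -> Cond:
    """Three-valued OR (like R's |): TRUE wins, then NA, else FALSE."""
    def combined(row: Row) -> Optional[bool]:
        values = [c(row) for c in conds]
        if any(v is True for v in values):
            return True
        if any(v is None for v in values):
            return None
        return False
    return combined


def when_all(*conds: Cond) -> Cond:
    """Three-valued AND (like R's &): FALSE wins, then NA, else TRUE."""
    def combined(row: Row) -> Optional[bool]:
        values = [c(row) for c in conds]
        if any(v is False for v in values):
            return False
        if any(v is None for v in values):
            return None
        return True
    return combined


def negate(cond: Cond) -> Cond:
    """R-style !: flips TRUE/FALSE, NA stays NA."""
    def negated(row: Row) -> Optional[bool]:
        value = cond(row)
        return None if value is None else not value
    return negated


def filter_rows(rows: list, cond: Cond) -> list:
    """Keep rows where cond is TRUE; FALSE and NA rows are dropped."""
    return [r for r in rows if cond(r) is True]


def filter_out_rows(rows: list, cond: Cond) -> list:
    """Drop rows where cond is TRUE; FALSE and NA rows are kept."""
    return [r for r in rows if cond(r) is not True]


def gt(col: str, threshold: float) -> Cond:
    """Column comparison that propagates missing values as None."""
    return lambda row: None if row[col] is None else row[col] > threshold


rows = [
    {"id": 1, "age": 70, "bmi": 22},
    {"id": 2, "age": 40, "bmi": 35},
    {"id": 3, "age": None, "bmi": 24},
    {"id": 4, "age": 30, "bmi": 21},
]
risky = when_any(gt("age", 65), gt("bmi", 30))

print([r["id"] for r in filter_rows(rows, risky)])          # [1, 2]
print([r["id"] for r in filter_out_rows(rows, risky)])      # [3, 4]
print([r["id"] for r in filter_rows(rows, negate(risky))])  # [4]
```

The last line is the trap filter_out() is meant to avoid: negating the condition inside filter() silently loses row 3, whose age is missing.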

New preprint from Tiffany A. Timbers and Mine Çetinkaya-Rundel: Ten simple rules for teaching data science. I wrote a short post about this paper here.

Teach data science by doing data analysis

Use participatory live coding

Give tons of practice and timely feedback

Use tractable or toy data examples

Use real and rich, but accessible data sets

Provide cultural and historical context

Build a safe, inclusive, and welcoming community

Use checklists to focus and facilitate peer learning

Teach students to work collaboratively

Have students do projects

DARPA’s Biological Technologies Office is launching the Protean program with a proposer’s day on Feb 20. DARPA is soliciting innovative proposals for the design of medical countermeasures (MCMs) that provide complete protection against current and future chemical threats. The Protean program will develop candidate MCMs that use non-classical mechanisms to preserve human protein function and prevent threat agent binding. Within Protean, individual technical areas will each focus on one of three specific threat classes: nerve agents, synthetic opioids, and ion channel toxins.

Sara Altman and Simon Couch: AI Newsletter 2026-01-30. Claude constitution, electricity use of AI coding agents, how well LLMs interpret plots, ragnar, terms.

Patrick Mineault: Claude Code for Scientists. CC and similar tools can accelerate your development if you know what you’re doing, but “code faster than you can verify” is a trap. Use plan->execute->eval loops, TDD, git, and ruthless refactors so speed doesn’t become silent error propagation.
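One cheap guard against agent refactors that quietly change behavior is a characterization (golden-output) test: before the agent touches a function, pin down what the old version returns on a batch of inputs, then require the refactored version to match. A minimal Python sketch, assuming a hypothetical summarize_old()/summarize_new() pair where the “new” version stands in for agent-written code:

```python
# Characterization test sketch: the refactored function must reproduce the
# old function's outputs on many inputs. Both functions are hypothetical.
import math
import random
import statistics


def summarize_old(values):
    """Original hand-rolled version: mean and sample standard deviation."""
    n = len(values)
    mean = sum(values) / n
    ss = sum((v - mean) ** 2 for v in values)
    return mean, math.sqrt(ss / (n - 1))


def summarize_new(values):
    """Refactored version (say, agent-written) using the statistics module."""
    return statistics.mean(values), statistics.stdev(values)


def check_refactor(old, new, cases, rel_tol=1e-9, abs_tol=1e-12):
    """Raise AssertionError on the first input where old and new disagree."""
    for case in cases:
        for a, b in zip(old(case), new(case)):
            if not math.isclose(a, b, rel_tol=rel_tol, abs_tol=abs_tol):
                raise AssertionError(f"mismatch on {case!r}: {a} != {b}")
    return True


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the check is reproducible
    cases = [[rng.gauss(0, 1) for _ in range(rng.randint(2, 50))]
             for _ in range(200)]
    print(check_refactor(summarize_old, summarize_new, cases))
```

A subtle slip like swapping stdev() for pstdev() (population instead of sample SD) fails this check immediately, which is exactly the kind of silent error that fast agent output can hide. In a real project this would live in the test suite and run on every commit.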

Not to be outdone, OpenAI launched a dedicated Codex desktop app positioned as a focused UI for running multiple agents in parallel, keeping changes isolated via built-in worktrees, and extending behavior with skills and scheduled automations. I tested it out by refactoring an old R package I wrote from base R graphics to ggplot2:

Google: Preserving the genetic information of endangered species with AI.

Related: Colossal Biosciences is partnering with the UAE to build the BioVault, a cryobank to preserve genetic samples from 10,000 species. In Forbes: Colossal BioSciences, UAE To Launch Biovault And Lab At Museum Of The Future.

STAT: AI biotech founded by former Google CEO Eric Schmidt is raising $150 million. The company, Hologen (hologen.ai), describes itself as a “frontier medical AI company, a drug development & diagnostics company, and an investment company, wrapped into one” and wants to create “large medicine models” that account for variation in different people’s biology and responses to treatment.

Matt Lubin, Biosecurity Stack: Five Things: Feb 1, 2026: (1) Reddit for AI agents; (2) Dario Amodei’s latest essay; (3) Legal challenges to Anthropic activities; (4) AlphaGenome publishes in Nature; (5) AIxBiosecurity framework from NTI.

Speaking of which, go read Dario Amodei’s new essay, “The Adolescence of Technology.” I’ll write a bit more about this essay here soon. This essay is the risk-side companion to “Machines of Loving Grace”: less utopia, more battle plan. If you’ve tuned out AI safety, this is a crisp map of what can go wrong and what to do next. Amodei slices risk into buckets: autonomy, misuse, power grabs, economic shock, and weird second-order effects. That taxonomy is handy for teaching and for building actual safety roadmaps. For the biosecurity folks: the sharpest section is misuse-for-destruction. AI breaks the old coupling between motive and ability by turning “average-but-malicious” into “guided expert”. Guardrails need to assume sustained, adversarial pressure. The best part is practicality: layered controls (training + classifiers + monitoring), plus transparency rules to avoid safety theater, and targeted policy levers (e.g., synthesis screening, incident reporting). Takeaway: treat safety as infrastructure, not PR. Measure uplift, publish system cards, monitor in the wild, and legislate transparency first so policy can tighten as evidence gets clearer. Even if you disagree on timelines, the agenda is valuable: build interpretability, robust evals, and lightweight, evidence-driven regulation that can ratchet as risk signals strengthen.

Wes McKinney: From Human Ergonomics to Agent Ergonomics. Wes argues that as software development becomes increasingly agent-driven, the traits that made Python dominant (like human readability and ease of use) matter far less. In an agentic loop, fast compile-test cycles, predictable performance, and frictionless packaging dominate, giving compiled languages like Go and Rust a major advantage. As a result, developers themselves will write much less Python, even if Python remains widely used by agents and as a thin orchestration or exploratory layer. Python isn’t disappearing, but its role is shifting away from being the primary language humans hand-write for production systems.

Human ergonomics matters less when humans aren’t the primary authors. […] I still love the language, but it seems clear that I and much of the industry will be writing less and less of it.

Google DeepMind published the AlphaGenome paper in Nature last week: Advancing regulatory variant effect prediction with AlphaGenome. AlphaGenome is a unified DNA sequence model designed to advance regulatory variant-effect prediction and shed light on genome function. It analyzes DNA sequences of up to 1 million base pairs to deliver predictions at single base-pair resolution across diverse modalities, including gene expression, splicing patterns, chromatin features, and contact maps. The code is on GitHub, model weights on HuggingFace, and the author roundtable on YouTube. Matt Lubin has a nice brief on AlphaGenome in his recap earlier this week.

rOpenSci News Digest, January 2026: champions program, 2025 wrap-up, R-universe & GitHub, coworking, new packages, software peer review, blog posts, tech notes, and my favorite section in all of these recaps: package development corner.

R Weekly 2026-W06: Interview, Practical git, duckdb.

Joe Rickert (R Works): December 2025 Top 40 New CRAN Packages. Agriculture, AI, medical stats, audio, pharma, causal inference, computational models, ecology, statistics, econometrics, epidemiology, genetics, time series, utilities, genomics, machine learning, visualization, mediation analysis.

On January 29, 2026, R-Ladies Rome hosted Git & GitHub: Practical Version Control for Data Work, a hands-on tutorial aimed at demystifying version control for people working with data, analysis, and research projects. Resources from the workshop:

Automatic programming (Salvatore Sanfilippo a.k.a. antirez). AI-assisted programming isn’t a special category anymore. We just call that “programming.” You specify, steer, test, refactor. The tools changed, the job didn’t. Vision and judgment are still the work.

Nature: Does AI already have human-level intelligence? AGI gets misdefined as perfection, autonomy, or superintelligence. But human general intelligence is messy, uneven, and error-prone too. If we credit humans with general intelligence from behavior, is it correct to apply the same standard to frontier LLMs?

On the other hand, things are different if you’re still learning. A few days ago Anthropic published research on how AI impacts skill formation in coding. You can read their blog post summarizing the paper here. I wrote a short post about the paper a few days ago.

Nathan Witkin: Against the METR graph. The METR graph is one of AI’s most cited benchmarks. This critique exposes flaws: tiny, biased human baselines, ~2/3 contamination risk, and incentive designs that inflate completion times. The trend line may not mean what people think it means.
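For context on what the critique targets: as I understand METR’s approach, each task gets a human baseline completion time, a logistic curve of agent success is fit against log2 of that time, and the “time horizon” is the task length where predicted success crosses 50%. The sketch below does that with plain gradient descent on made-up toy data (not METR’s data or code; the function names are hypothetical). It also shows why the baselines matter so much: inflate every human time by a factor k and the estimated horizon scales by k too.

```python
# Toy 50% time-horizon estimate: logistic fit of success vs log2(human minutes).
# Made-up data; a sketch of the idea, not METR's implementation.
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(xs, ys, lr=0.5, steps=5000):
    """Fit P(success) = sigmoid(b0 + b1 * x) by gradient descent on log loss."""
    b0 = b1 = 0.0
    n = len(xs)
    for _ in range(steps):
        g0 = g1 = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(b0 + b1 * x) - y
            g0 += err
            g1 += err * x
        b0 -= lr * g0 / n
        b1 -= lr * g1 / n
    return b0, b1


def time_horizon(minutes, successes):
    """Human task length (minutes) at which predicted success is 50%."""
    xs = [math.log2(m) for m in minutes]
    mean_x = sum(xs) / len(xs)
    # Centering x speeds up gradient descent considerably.
    b0, b1 = fit_logistic([x - mean_x for x in xs], successes)
    # sigmoid(...) == 0.5 where b0 + b1 * (x - mean_x) == 0
    return 2 ** (mean_x - b0 / b1)


if __name__ == "__main__":
    minutes = [1, 2, 4, 8, 16, 32, 64, 128]  # toy human baseline times
    successes = [1, 1, 1, 0, 1, 0, 0, 0]     # toy agent outcomes
    h = time_horizon(minutes, successes)
    h2 = time_horizon([2 * m for m in minutes], successes)
    print(f"50% horizon: {h:.1f} min; with 2x inflated baselines: {h2:.1f} min")
```

Nothing about the agent changed between the two estimates; only the human baselines did. That’s why biased or inflated baseline times feed straight into the trend line.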

New papers & preprints:

PaperBanana: Automating Academic Illustration for AI Scientists

scTREND: An annotation-free single-cell time-resolved and condition-dependent hazard model

Heritability of intrinsic human life span is about 50% when confounding factors are addressed

Multiple protein structure alignment at scale with FoldMason

Improving atlas-scale single-cell annotation models with hierarchical cross-entropy loss

Using semantic search to find publicly available gene-expression datasets

Embeddings from language models are good learners for single-cell data analysis

Customizing CRISPR–Cas PAM specificity with protein language models