Weekly Recap (February 13, 2026)

NIH bioinformatics funding, biosecurity, AI and labor, AI and social life, Applied Machine Learning Conference, R updates (R Weekly, Tidyverse), Saudi genomics, lab automation, papers & preprints

NLM posted a new NOFO (R01): Advancing Bioinformatics, Translational Bioinformatics and Computational Biology Research. Summary, emphasis added.

The National Library of Medicine (NLM) seeks applications for research projects that drive groundbreaking innovation and advanced development in the fields of bioinformatics, translational bioinformatics, and computational biology. The primary goal of this initiative is to support the creation and implementation of cutting-edge methods, tools, and approaches that can transform the landscape of biomedical data science. This NOFO aims to address the growing need to leverage transformative technologies — such as artificial intelligence (AI), machine learning, and large-scale computational platforms — to extract actionable knowledge from vast, diverse, and complex biological datasets. By enabling more effective interpretation and integration of multi-dimensional biological and biomedical data, this research will ultimately contribute to improving individual and population health outcomes.

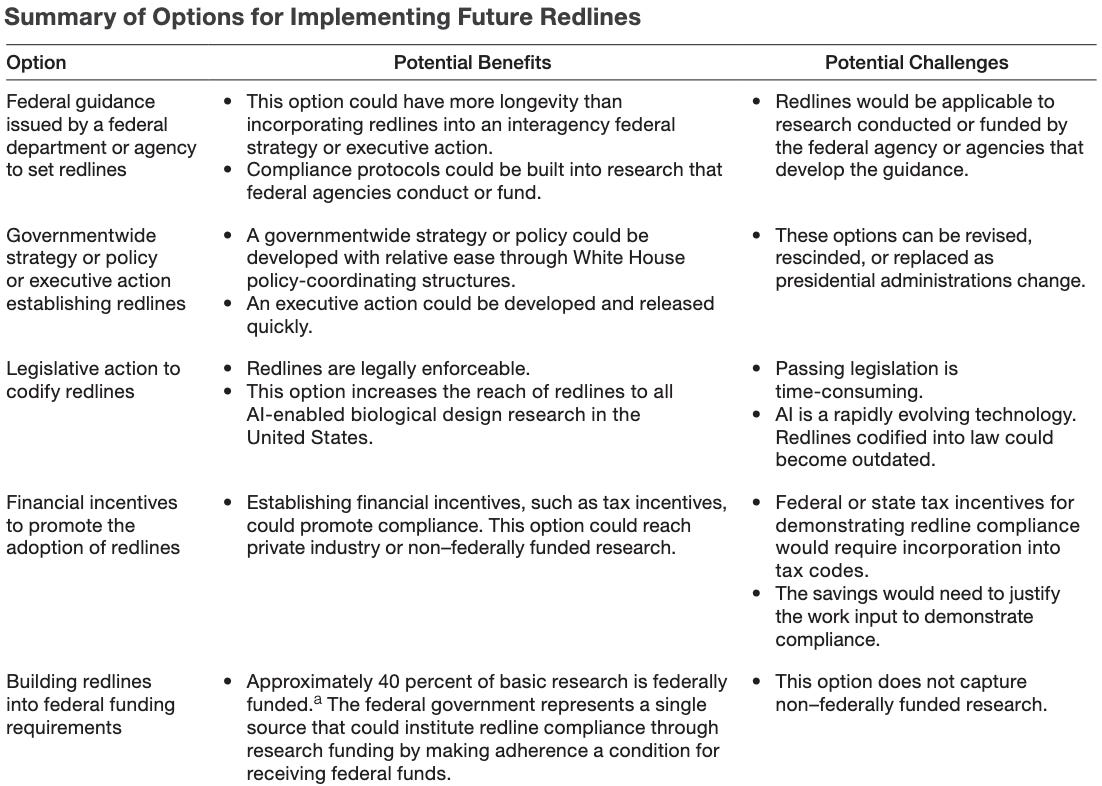

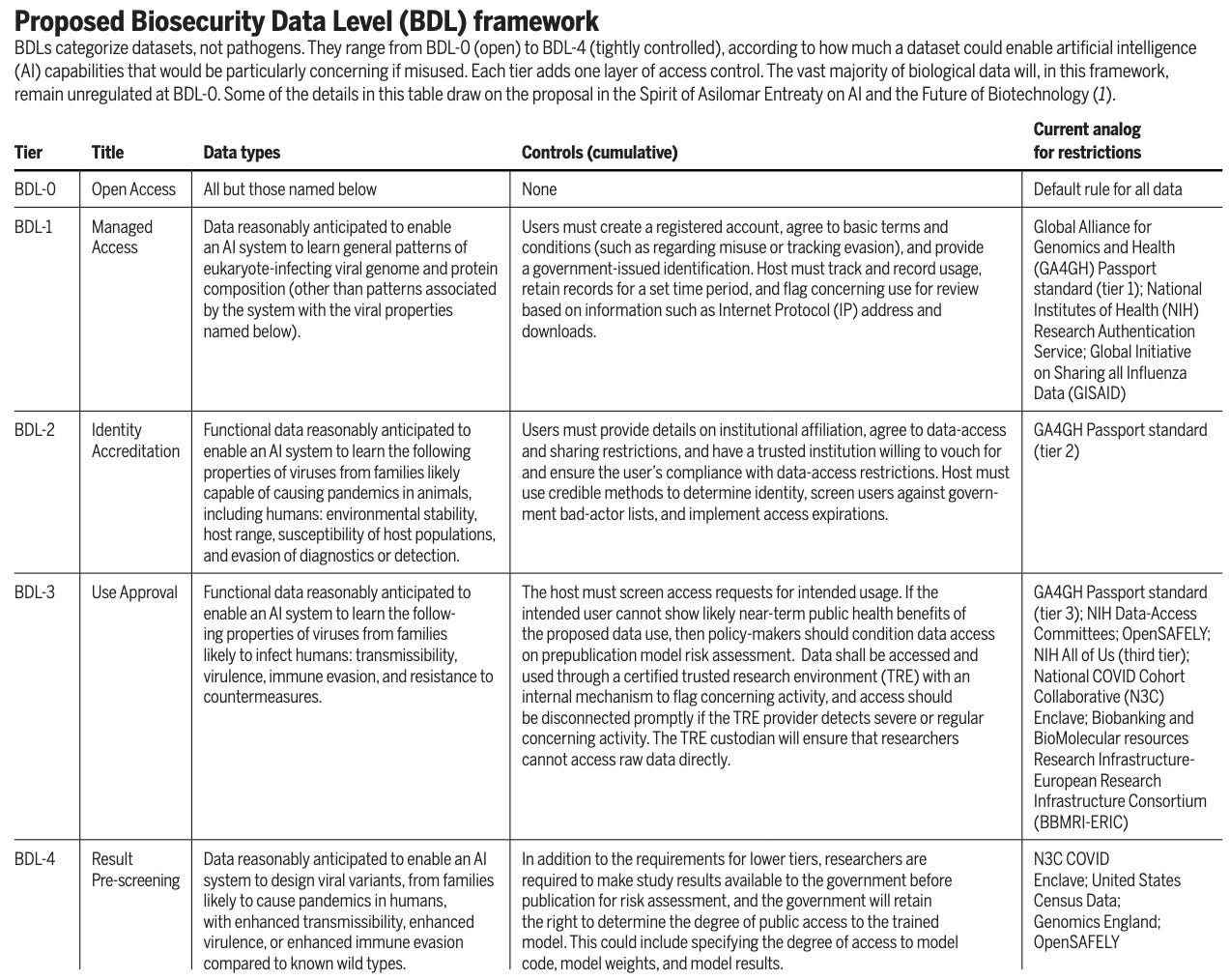

RAND Report: Developing a Risk-Scoring Tool for Artificial Intelligence–Enabled Biological Design: A Method to Assess the Risks of Using Artificial Intelligence to Modify Select Viral Capabilities. RAND released this new report proposing a dual-axis risk-scoring tool for AI-enabled viral engineering that measures both the severity of a biological modification and the actor capability level needed to execute it. Applying the tool to 10 published studies, they find AI is lowering knowledge barriers for less experienced researchers, but physical constraints like lab access and specialized equipment remain bottlenecks that AI cannot yet bridge. The tool currently lacks indicator weighting and empirical benchmarks, and the authors frame it as a foundation for establishing policy “redlines” rather than a definitive risk framework.

Science: Biological data governance in an age of AI. This policy forum paper proposes “Biosecurity Data Levels” (BDL-0 to BDL-4) for controlling access to pathogen data used to train bio AI models. Most bio data stays open (BDL-0); only narrow, high-risk functional virology data would require trusted research environments and pre-release safety screening. Earlier this week I published a post about this paper and an NTI|bio paper released around the same time, since both touch on similar topics.

Sentinel Bio: 2025 Annual Letter: A summary of Sentinel’s strategy and grantmaking in 2025 and future plans for preventing pandemics.

Matt Shumer: Something Big Is Happening. This viral essay has been making the rounds this week. Matt argues that we’re on the cusp of “something much, much bigger than COVID.” He writes that over the past year, tech workers have watched AI go from “helpful tool” to “does my job better than I do,” and that this “is the experience everyone else is about to have.”

War on the Rocks: Biodefense Blind Spot: Why Washington Confuses Pandemics with Bioweapons. Rapid advances in commercially available AI models might enable users to design dangerous proteins, enhance bioweapon planning, and potentially evade DNA synthesis screening, exposing serious weaknesses in existing biosecurity systems. Yet U.S. biodefense strategy remains focused on an integrated “all hazards” approach built for natural pandemics, leaving critical gaps in detection, deterrence, and oversight of AI-driven synthetic biology threats that require urgent institutional reform.

My colleague here at UVA, Mona Sloane, has a new book available for pre-order ahead of its May 12, 2026 release: Predicted: How AI Is Restructuring Social Life.

NYT Opinion: What if Labor Becomes Unnecessary? Discussion featuring David Autor of MIT, Natasha Sarin of Yale, and our very own Anton Korinek at UVA’s Darden School of Business.

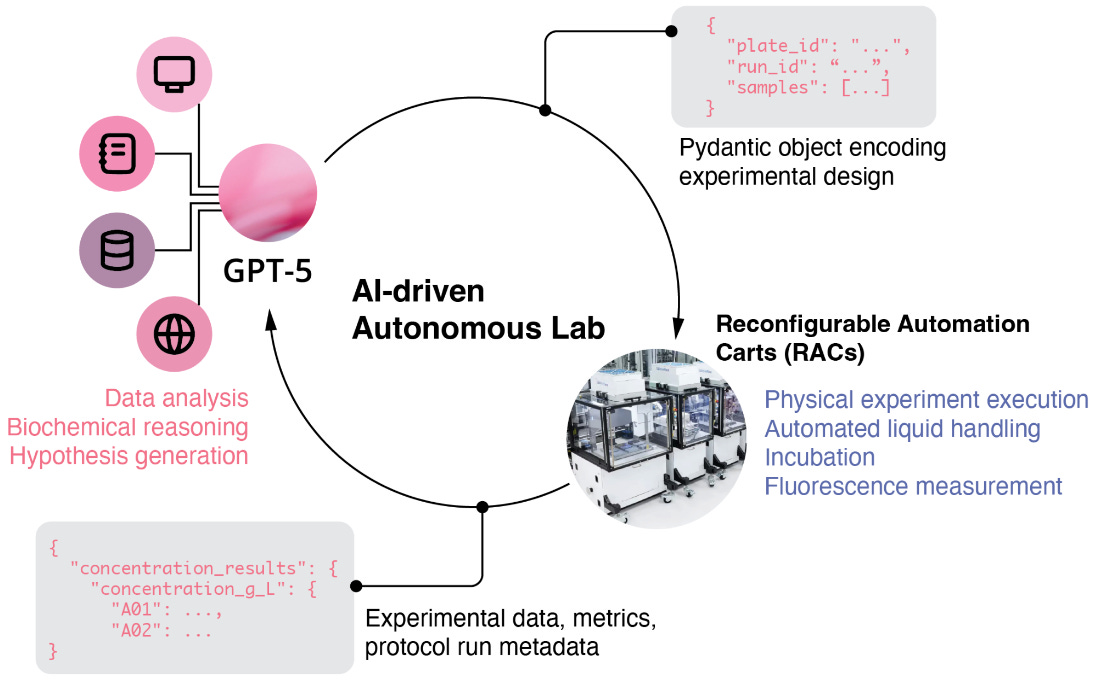

OpenAI and Ginkgo Bioworks: GPT-5 lowers the cost of cell-free protein synthesis.

Registration is open for the R Risk conference, Feb 18–19. This conference is dedicated to the open-source R community and risk analytics. It’s 100% online and very inexpensive.

The Call for Proposals for the 2026 Applied Machine Learning Conference is open through Feb 22. We’re seeking proposals for 30-minute talks and 90-minute tutorials covering topics in data science, AI, machine learning, scientific computing, and related fields.

Conference dates: April 17–18, 2026

Location: Charlottesville, Virginia

Submission deadline: Sunday, February 22, 11:59pm AoE

Matt Lubin writing for Asimov Press: A Brief History of Xenopus. I started my journey in biology working in a developmental biology lab doing experiments in Xenopus embryos. From early experiments on fertility and embryonic development to becoming the first cloned eukaryote from an adult cell, Xenopus frogs have had an outsized influence on the life sciences.

I always get a lot out of Matt Lubin’s weekly recaps. This week’s was another good one: Five Things: Feb 8, 2026: AIxBiosecurity in Science, SpaceX eats xAI, AI Safety Report, OpenAI vs Anthropic, Las Vegas biolab.

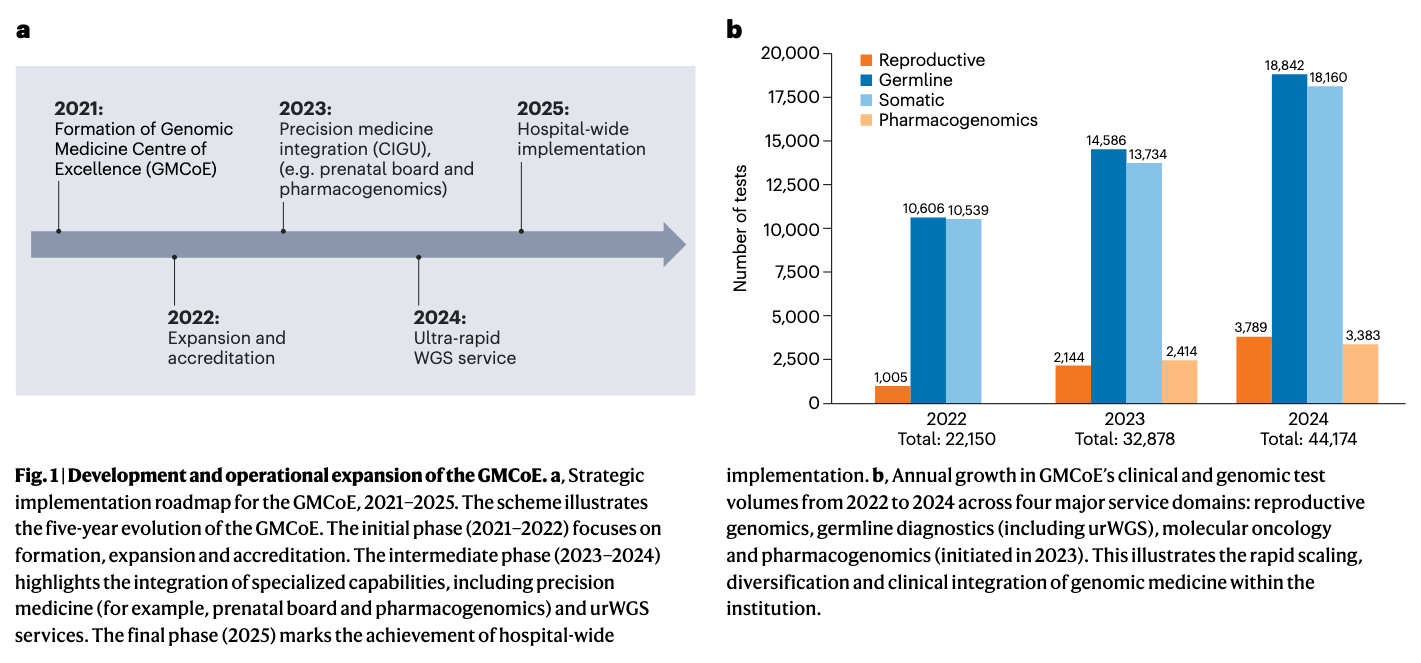

Nature Genetics commentary: Building genomic medicine in Saudi Arabia. Genomic medicine can transform diagnosis and treatment, particularly in populations with high rates of inherited disorders. This commentary describes the Genomic Medicine Center of Excellence, launched to strengthen Saudi genomic infrastructure, and highlights lessons for underrepresented populations.

Tidyverse blog on dplyr 1.2.0: dplyr::if_else() and dplyr::case_when() are up to 30x faster in this release.

R Weekly 2026-W07: sitrep, webRios, Jarl linter.

Athanasia Mo Mowinckel: Why Every R Package Wrapping External Tools Needs a sitrep() Function.

HBR: AI Doesn’t Reduce Work—It Intensifies It. See also, Simon Willison’s reaction.

“I’m frequently finding myself with work on two or three projects running parallel. I can get so much done, but after just an hour or two my mental energy for the day feels almost entirely depleted. […] The HBR piece calls for organizations to build an ‘AI practice’ that structures how AI is used to help avoid burnout and counter effects that ‘make it harder for organizations to distinguish genuine productivity gains from unsustainable intensity.’

I think we’ve just disrupted decades of existing intuition about sustainable working practices. It’s going to take a while and some discipline to find a good new balance.”

Abhishaike Mahajan at Owl Posting: Heuristics for lab robotics, and where its future may go: three ideologies of lab robotics progress, why they may all converge on the same business model, whether any of it will be actually helpful for the problems that plague drug discovery the most, and more.

New papers & preprints:

Without safeguards, AI-Biology integration risks accelerating future pandemics

Navigating ethical, legal and social implications in genomic newborn screening

PRIZM: Combining Low-N Data and Zero-shot Models to Design Enhanced Protein Variants

A toolkit for programmable transcriptional engineering across eukaryotic kingdoms