Ollama now has a GUI

Ollama's new app has a built-in graphical user interface. It has a model selector, you can drop in files, and supports multimodal and vision models like Gemma3 and reasoning models like DeepSeek-R1.

I’ve written a lot about Ollama here. Ollama lets you run open-weight models like Llama, Gemma, Mistral, Qwen, DeepSeek, etc. on your own computer. You don’t have to pay for a frontier model like ChatGPT, Claude, or Gemini, and all the inputs and outputs stay on your computer, minimizing any privacy and security concerns.

Until recently Ollama was a command-line only tool. A few months ago I wrote about a few different user interfaces you can run in front of Ollama that give you a familiar ChatGPT-like feel.

Last week Ollama released a new app for Mac and Windows now with a built-in UI.

Demonstration

Let’s take it for a spin. My 16GB MacBook Air isn’t powerful enough to run OpenAI’s new open-weight gpt-oss model, so I’ll be using gemma3:4b and deepseek-r1:8b.

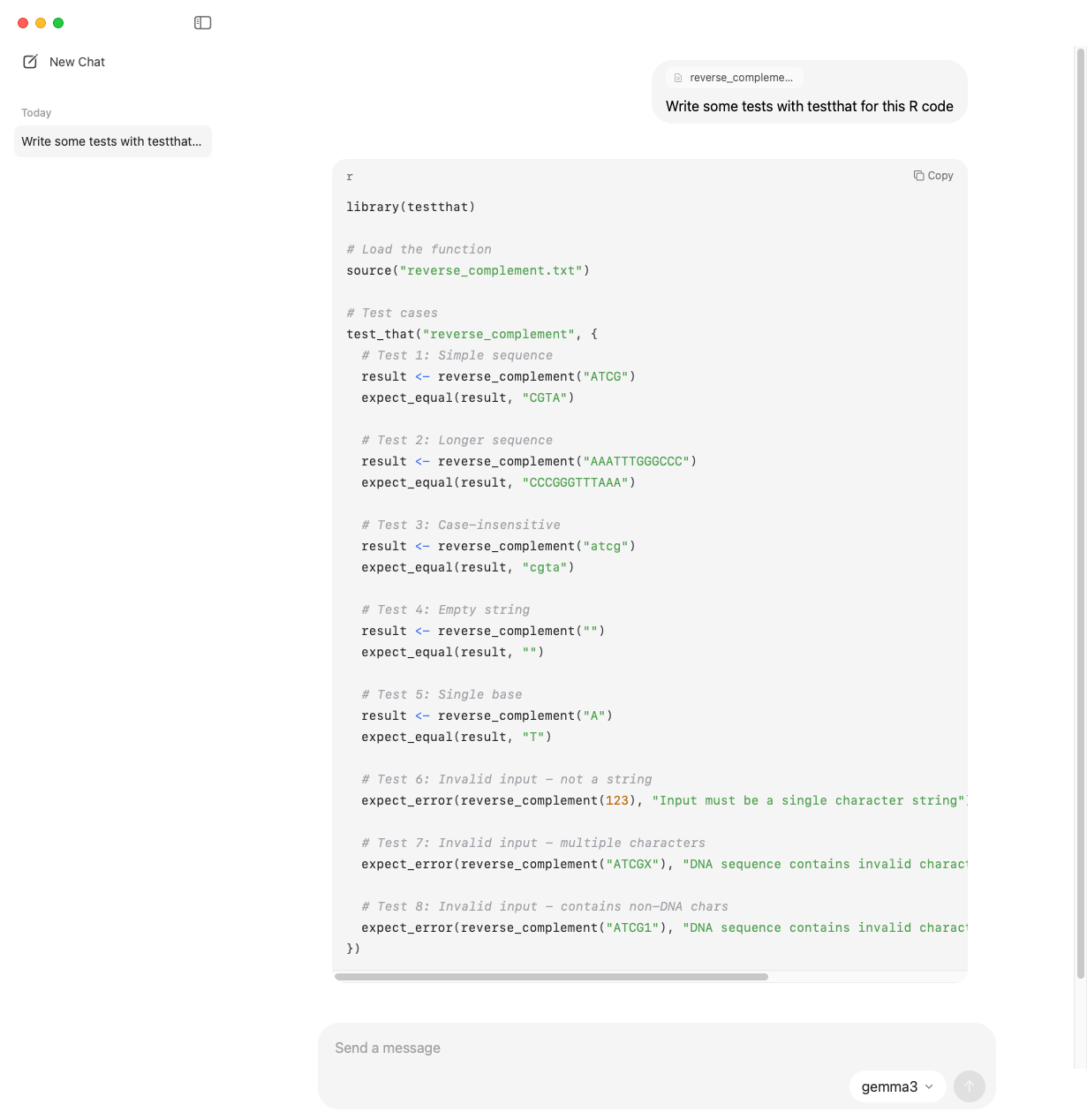

Writing tests for R code from a file

You can drag and drop a file into the chat window and ask whichever model you’re going to do something with the file. Here I’m dropping in this simple R file with a function to reverse complement a DNA sequence, and I’m asking for tests with testthat. I’m using Gemma3.

Image analysis

Gemma3 is a vision model, so we should be able to upload an image and ask about it. I uploaded this photo I took yesterday on a bike ride through Shenandoah National Park, and asked Gemma3 to summarize the image.

Here’s the result.

Reasoning models

Next I wanted to try out one of the reasoning models. I’m using the DeepSeek-R1:8b model, running on my MacBook Air, and I’m asking the following question:

You have sequenced the genome of a mammal that has never been sequenced before, and identified thousands of structural variants (SVs) relative to the closest reference genome. Many are in intergenic regions, but some overlap coding and regulatory regions. How would you prioritize which SVs to experimentally validate and characterize, and what tools or data would you use?

Result

Here’s what the GUI looks like when it’s thinking and later responding:

Here’s the full response:

Okay, validating thousands of structural variants (SVs) discovered in a novel mammal genome is a significant challenge due to the high cost and technical demands of experimental methods. A systematic prioritization strategy based on bioinformatics evidence is crucial before heading to the lab.

Here's how you would approach this:

Phase 1: Prioritization Using Bioinformatics Data

The goal here is to identify SVs that are most likely biologically relevant, reducing the number of false positives and focusing resources.

Core Datasets & Analyses (Starting Point)

Variant Call Format (VCFF) Files: The primary output from your variant calling pipeline for SVs.

Reference Genome Alignment: Detailed alignment files (like BAM or CRAM) showing how the query genome maps against the reference, essential for accurate breakpoint identification and filtering potential artifacts.

Breakpoint Coordinates & Orientations: For each SV call, precise start/end points in both the query sequence and the reference are needed.

Filtering Strategy

You need to filter out low-confidence calls and focus on those most likely to be true and impactful.

Filter by Mapping Quality:

Exclude variants where key bases (especially at breakpoints) have very low mapping quality scores or base quality scores.

Tools like

bcftools(bcftools filter -e 'QUAL < X') can use the variant's overall confidence score.Filter by Read Support:

Ensure SVs are supported by sufficient numbers of reads, especially discordant pairs or split-read mappings.

Higher minimum mapping quality for supporting reads is ideal (e.g., >30).

Check read depth across the variant region; extremely low coverage can be suspicious.

Prioritization Criteria

Now, rank the remaining SVs based on potential biological significance and confidence:

Conservation:

Highest Priority: Focus on variants occurring in orthologous regions between your novel mammal and other related genomes (if available). This suggests shared evolutionary history or functional importance.

Tools:

BLAT/BLAST searches against highly conserved species databases to find homology.

UCSC Genome Browser's "Conservation" track (

phastConsscores,slidingWindowConservationtrack).Ensembl Compara (if other genomes are in the ENSEMBL pipeline).

Overlap with Known Functional Elements:

Prioritize SVs overlapping gene regions or regulatory elements identified in other mammals.

Check for:

Coding Variants: SNPs, indels within exons, complete gene deletions/fusions (gene disruption analysis).

Regulatory Variants: Intragenic variants affecting splice sites, promoter or enhancer disruptions/insertions (based on annotations like those from ENCODE or Roadmap Epigenomics), potentially altering transcription factor binding site accessibility or creation of new elements.

Tools:

ANNOVAR (

annotate_variation.pl) - uses gene and regulatory databases (Ensembl, RefSeq, UCSC).SnpEff/SnpVault configured for SVs.

bedtools intersectwith BED files defining coding regions or predicted regulatory elements from ENSEMBL/Gencode/other sources.Variant Density & Novelty:

Areas with a high density of SVs (relative to the reference) might indicate structural instability or potentially functional hotspots.

Conversely, singletons in otherwise highly conserved regions are riskier but shouldn't be dismissed entirely – they could represent real differences. However, if you have independent evidence from other methods (like synteny breaks), they become higher priority.

Size Distribution:

Analyze the size distribution of your SVs.

Prioritize variants that fall within typical sizes for SVs in mammalian genomes or align with a significant breakpoint cluster near these sizes, rather than extremely large (megabases) or small (<10bp for deletions, though context matters). Extremely large SVs often require specialized validation techniques.

Breakpoint Refinement & Genomic Context:

Use high-resolution structural variation callers that pinpoint breakpoints accurately.

Look at the genomic context of the breakpoint:

Is it within a gene or critical regulatory sequence? (Already covered in step 2)

Are there other SVs nearby (nested, clustered)? This might suggest complex rearrangement regions. Be cautious as clustering can sometimes be artifactual.

Check for potential homology to distant genomic locations – this hints at possible translocation breakpoints.

Functional Consequences Assessment:

Even if a variant falls within a known gene or regulatory element, assess its specific effect:

Frame shift/stop gain/loss mutations in coding regions.

Variants predicted to be deleterious (e.g., loss-of-function predictions).

Disruption of strong putative enhancers/promoters.

Data Sources for Prioritization

Gene Annotations: Ensembl, Gencode, RefSeq (for gene boundaries and function).

Regulatory Element Predictions: ENCODE, Roadmap Epigenomics, UCSC Genome Browser tracks.

Conservation Tracks: From UCSC or ENSEMBL data portals.

Orthology Information: NCBI HomoloGene, Ensembl Compara.

Phase 2: Experimental Validation & Characterization

Once prioritized (e.g., a manageable set of perhaps hundreds), you design experiments:

PCR and Sanger Sequencing / Pyrosequencing:

Best for precise breakpoint confirmation in smaller SVs (<~5kb).

Design specific primers flanking the predicted breakpoints.

Run PCR on DNA extracted from multiple individuals (if possible) to confirm variant presence/absence. Sanger sequencing checks the exact sequence change.

PCR and Capillary Electrophoresis/Iontorrent Sequencing:

For variants where read length might not span the entire SV but breakpoint confirmation is needed.

Design primers that amplify across the breakpoint or include it in a longer amplicon suitable for short-read sequencing.

Long-Read Sequencing (e.g., PacBio, Oxford Nanopore):

Excellent for de novo detection and phasing of larger SVs (>5kb) and complex variants involving multiple breaks.

Directly sequences the region, confirming structure without needing separate breakpoint assays.

Requires targeted sequencing or whole-genome long-read.

Array-Based Methods:

Custom CNA Arrays (e.g., Agilent CGH430k): Can confirm copy number changes across a genome but poor for defining breakpoints and smaller SVs (<5kb).

SNP Arrays: Primarily for CNVs, less so for other types like inversions or complex rearrangements.

Characterization Techniques (After Validation)

PCR & Sanger Sequencing: Confirm exact sequence change at the breakpoint.

Quantitative PCR (qPCR): Estimate relative dosage (copy number) – e.g., deletions, duplications, amplifications. Can be used on a panel of variants or specific breakpoints.

Western Blotting / qRT-PCR for Gene Expression: Validate predictions about coding potential changes due to SVs affecting transcription start sites or splice sites.

Functional Assays:

Cell Culture Models (eKnockout): Introduce the validated SV into cells and measure functional consequences. This can be tricky in a novel species.

RNAseq: Sequence transcriptomes from individuals with and without the variant to detect aberrant splicing or gene expression changes predicted by the SV.

ChIP-seq (if relevant): Check if an SV disrupts binding of key transcription factors, affecting epigenetic marks or enhancer activity. Might require cell/tissue types where the element is active.

Summary & Workflow

Perform initial filtering on the raw SV calls based on mapping quality and read support.

Prioritize variants using:

Conservation checks against related genomes.

Overlap with high-confidence functional elements (genes, promoters, enhancers).

Variant density within regions. (Optional) Analyze size distribution to guide expectations.

Select a subset of the top-priority SVs for experimental validation.

Validate using targeted PCR/Sanger or long-read sequencing methods.

Characterize validated variants using qPCR, expression analysis (qRT-PCR/RNAseq), Western blotting, functional assays in cell culture, and potentially advanced techniques like CATCH-ASE or Hi-C if applicable.

This multi-step approach leverages comparative genomics data effectively to prioritize findings from a novel genome sequencing project.

Thinking transcript

One of my favorite things about using the DeepSeek-R1 reasoning model (locally, never through the deepseek.com service!) is watching the “thinking” that happens before you get a response. Here’s the full “thinking” transcript.

Okay, user is asking about prioritizing structural variant validation in a novel mammal genome project. This is clearly someone with bioinformatics expertise working on comparative genomics - probably a grad student or researcher in evolutionary biology/genetics field.

Hmm, they've already sequenced the genome and found thousands of SVs relative to reference, so they're at the experimental validation stage. The key challenge here is distinguishing biologically significant variants from sequencing artifacts. User specifically mentions both intergenic and functional region variants need consideration, showing good attention to detail.

Let me break this down systematically first: We have two phases - prioritization before wet lab work (which saves resources) and then validation methods themselves. For a new species, we'd want to be extra cautious about false positives since the reference comparison might introduce noise from assembly differences.

The intergenic variants are trickier because their impact isn't immediately clear unless they're near genes or have other features. I should emphasize conservation checks first - those will filter out most artifacts. Then look at variant density and size distribution, but be careful not to bias toward rare large variants if that's unusual for the species.

For coding regions, we can use more aggressive filtering because there are clearer functional consequences. But regulatory regions require special attention - enhancers, promoters etc. might have subtle effects. The user should consider both classical gene disruption methods and newer techniques like CATCH-ASE or Hi-C for 3D structure impacts.

They didn't specify if they want to validate all SVs at once or focus on certain types first. I'll assume they're looking for a comprehensive approach but with some prioritization strategy. The answer should balance thoroughness with practicality - nobody has the resources to validate every variant in this hypothetical genome.

Need to mention that phenotype data would be ideal if available, since it directly guides which variants might cause disease or interesting traits. But many de novo mammal genomes don't have complete phenotypic information yet. So I'll include methods for when there is no phenotype data.

The technical challenges here are significant - long SVs (>1kb) in a novel species would be best validated with PCR and Sanger sequencing, while complex variants might need more advanced techniques like nanopore or optical mapping. User should consider their lab capabilities too since they're asking about validation methods.

This seems like a thoughtful researcher who understands the importance of prioritization. They're probably drowning in data and looking for an unbiased way to select meaningful targets. I'll structure this as a decision tree with multiple filtering tiers so it's easy to follow execution-wise.

Would be nice to put that Structural Variant challenge to GPT-5 and compare. Do you have access to GPT-5 yet?