GUIs for Local LLMs with RAG

Running local LLMs with Ollama, LM Studio, Open WebUI, and others for private and secure chat, vibe coding, image analysis, and "chat with your documents" using RAG

Anyone reading this newsletter has surely used frontier models like ChatGPT, Claude, and Gemini. I’ve written a few posts about using local models but haven’t really talked much about the tools I use to interact with them directly. Those previous posts reach local models through tools like ellmer in R or my own biorecap package, both of which talk to a locally running Ollama server.

But what is Ollama, and how do you use it to interact directly with a local LLM? What about other user interfaces running locally that provide a “nicer” graphical user interface like you’re used to with the commercial models? What about enabling other applications like RAG with a local model? Let’s delve deeper.

Ollama

Local models matter to users who prioritize privacy, cost savings, and security. Unlike cloud-based AI services, local models run entirely on your machine (or a cloud VM you manage), so sensitive data never leaves a system you control, and there are no API fees or usage limits. That makes them well suited to offline use, proprietary data handling, and scenarios where latency or reliability is critical.

Ollama is a lightweight framework designed to run LLMs locally with minimal setup. It streamlines the process of downloading, running, and interacting with models like Llama, Mistral, and Gemma, providing a simple API for integration into various applications. With Ollama you can access powerful AI capabilities on your own hardware, whether for chat interfaces, programming assistance, or local knowledge retrieval.
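To make "a simple API" a little more concrete, here is a minimal Python sketch that sends a single prompt to a locally running Ollama server over its REST interface. It assumes the server is listening on its default address (http://localhost:11434) and that the gemma3 model has already been downloaded with ollama pull. The function names are mine, for illustration only.

```python
import json
import urllib.request

# Assumed default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt, model="gemma3", url=OLLAMA_URL):
    """Build a POST request for Ollama's /api/generate endpoint."""
    # stream=False asks for one JSON object instead of a token stream.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="gemma3"):
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

With the server running, calling generate("Explain GC content in one sentence.") should return the model's answer as a string. Nothing here leaves your machine, since the request only goes to localhost.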

In those previous posts linked above I’m using other tools like ellmer and biorecap to interact with a local model like Llama3.3 via the Ollama server running locally. But I could just as easily chat with a local model directly.

Download the installer at ollama.ai, fire up your terminal, and download one of the available models. I’m writing this on an airplane, so before boarding I used the airport wifi to download Gemma3-4b, the very small model Google released a few days ago. It’s small (easily fits on my tiny MacBook Air), fast, and has a huge context window. First pull the model, then run an interactive chat session.

# Download the model
ollama pull gemma3

# Run the model with an interactive chat session
ollama run gemma3

Vibe coding

OpenAI co-founder Andrej Karpathy once remarked that “the hottest new programming language is English” and recently expanded on the idea of “vibe coding” — the idea that the AI tools for writing code are so good you can “just see stuff, say stuff, run stuff, and copy paste stuff, and it mostly works.”

Let’s try a little vibe coding. Let’s make a simple web app that fits in a single static HTML page that’ll take a DNA sequence and calculate the GC content. It should reject any entries that aren’t A, C, G, or T, and it should have a button that gives you an example sequence if you want it. Here’s the prompt I used:

Write an HTML file with embedded JavaScript that (1) Takes a DNA sequence as input, (2) calculates the GC content percentage, displaying the result dynamically as the user types. Include basic input validation to ensure only A, T, C, and G characters are allowed. Give me a button to insert an example sequence ("GATTACA") into the box. If the example sequence button is clicked, the app should display the GC content of the example sequence without any further interaction. Because the GC content updates dynamically there's no need for a "calculate" button to start the calculation.

Here’s what Ollama looks like generating the response with Gemma3-4b.

You can try out the app here. The sequence you enter stays entirely in your browser.

Here’s what it looks like (I copied in the ~3.7kb SARS-CoV-2 Spike gene sequence).
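For reference, the core logic the prompt asks for is tiny. Here is a minimal Python sketch of the validation and GC calculation. This is not the HTML/JavaScript that Gemma3 generated, just the same idea in a few lines. Accepting lowercase input and rejecting empty input are my own assumptions; the prompt doesn't specify either.

```python
def gc_content(sequence):
    """Return the GC percentage of a DNA sequence, or raise ValueError."""
    # Assumption: lowercase input is accepted and treated as uppercase.
    seq = sequence.strip().upper()
    if not seq:
        raise ValueError("sequence is empty")
    # Reject anything that isn't A, C, G, or T.
    invalid = sorted(set(seq) - set("ACGT"))
    if invalid:
        raise ValueError(f"invalid characters: {', '.join(invalid)}")
    gc = seq.count("G") + seq.count("C")
    return 100 * gc / len(seq)


# The example sequence from the prompt: 2 of its 7 bases are G or C.
print(f"{gc_content('GATTACA'):.2f}%")  # prints 28.57%
```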

The Ollama CLI works fine for most tasks, but it’s not as pretty as the UI you’re used to with tools like ChatGPT. We’ll revisit this later. One of the nice things about a CLI is that you can pipe stuff into and out of it. For example, I can echo a prompt, pipe it to ollama, then pipe the output to the pbcopy command (on macOS this puts stdout on your clipboard).

echo "python code to reverse complement a DNA sequence" \
| ollama run gemma3 \
| pbcopy

Ollama with vision models

Gemma3 can analyze images, and the easiest way to do this with Ollama is to put your image in a file, and reference that file path in your prompt.

My great-grandfather had a legendary tomato garden, and generations later I inherited his green thumb and love for tomatoes (which both inspired this tattoo I got last month). Here’s a photo taken many years ago of him showing me tomatoes from his garden.

Let’s see what Gemma3 thinks of this image. Instead of dropping into an interactive prompt session I’ll just run this on a command line through a pipe.

echo 'describe this image: ./grandpa.jpg' | ollama run gemma3

Here’s the response:

Overall Impression:

The image is a warm, nostalgic-looking photograph, likely taken outdoors. It evokes a sense of connection and intergenerational love.

Main Subjects:

Elderly Man: A distinguished-looking older man is the primary focus. He's wearing a light beige or cream-colored short-sleeved shirt and a light beige or cream-colored hat with a red and white striped band. He has a kind expression, with a slight smile and a thoughtful gaze. His skin shows signs of aging, with wrinkles and age spots.

Baby: A very young baby, with a bald head, is sitting in the man's lap. The baby is looking up at the man with a slightly bewildered expression.

Setting and Props:

Tomato: The baby is holding a large, ripe tomato. This suggests a summer harvest.

Background: The background is blurred, but it appears to be a lush green area, possibly a garden or park. The foliage is dense, creating a soft, out-of-focus backdrop.

Mood and Tone: The image has a gentle, heartwarming feel. It suggests a moment of connection between a grandfather and his grandchild, possibly sharing a simple pleasure like a tomato. The colors are warm and inviting, contributing to the overall sense of nostalgia.

Technical Aspects: The photograph has a slightly grainy texture, which is typical of older photographs. The lighting is soft and natural.
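If you'd rather script this than type it at the command line, the same kind of request can go through Ollama's REST API, which accepts base64-encoded images alongside the prompt. The sketch below is a rough illustration, assuming the server is at its default address (http://localhost:11434) and the model supports image input. The function names are hypothetical.

```python
import base64
import json
import urllib.request


def build_image_request(image_path, prompt="describe this image",
                        model="gemma3",
                        url="http://localhost:11434/api/generate"):
    """Build a request that sends a prompt plus one base64-encoded image."""
    with open(image_path, "rb") as handle:
        encoded = base64.b64encode(handle.read()).decode("ascii")
    # The "images" field carries a list of base64 strings.
    payload = {"model": model, "prompt": prompt,
               "images": [encoded], "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def describe_image(image_path):
    """Return the model's description of the image at image_path."""
    request = build_image_request(image_path)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

With the server running, describe_image("./grandpa.jpg") would return a description as a string. As with the CLI, the wording will differ from run to run.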

Open WebUI: a GUI for Ollama

The Ollama CLI works well, but it’s not pretty. You get raw markdown instead of rendered rich text. Code blocks aren’t nicely formatted. You don’t have easy copy / retry buttons. This is where a nice UI wrapping one of the open models can be helpful.

I tried out Open WebUI after reading Simon Willison’s post on it. It’s on GitHub, freely available and permissively licensed (BSD), and the documentation is great. The GitHub readme provides installation instructions via pip, but I used uv as described in my previous post on running tools with uv.

This one-liner installs all the dependencies and runs open-webui serve with a Python 3.11 interpreter, and it takes only a few seconds. There’s also a Docker container, and the long docker run command in the docs worked for me too. Either way, Open WebUI reaches a local model by connecting to the Ollama server running on your machine, so you need Ollama installed and running as described above.

uvx --python 3.11 open-webui serve

Let’s try the same vibe coding exercise as before. The response is essentially the same, but you now get those nice-to-haves I mentioned earlier (rendering, clipboard copy buttons, etc.). A few seconds in, Open WebUI also opens a pane to the right of the generated code where it renders the HTML page produced, much like Claude Artifacts.

What about image analysis? Just as with ChatGPT, you can paste an image straight into Open WebUI, as long as you’re using a model like Gemma3 that supports image input.

After pasting in the image and asking for a description, I get something very similar (we’re using the same model but remember these are stochastic — you’ll get a different response every time).
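Why stochastic? At each step the model scores every candidate next token and then samples from those scores rather than always taking the top one. Here is a toy Python sketch of temperature sampling. The three-word vocabulary and its scores are made up for illustration, and real models do this over tens of thousands of tokens.

```python
import math
import random


def sample_token(logits, temperature=1.0, rng=random):
    """Sample one token from a {token: score} mapping."""
    if temperature <= 0:
        # Treat zero temperature as greedy decoding: always the top token.
        return max(logits, key=logits.get)
    scaled = {tok: value / temperature for tok, value in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    weights = [math.exp(value - top) for value in scaled.values()]
    return rng.choices(list(scaled), weights=weights, k=1)[0]


# Made-up scores for the next word after "The baby is holding a ..."
logits = {"tomato": 2.0, "apple": 1.5, "ball": 0.5}

print([sample_token(logits, temperature=1.0) for _ in range(5)])  # varies run to run
print([sample_token(logits, temperature=0.0) for _ in range(5)])  # always "tomato"
```

Passing a seeded generator such as random.Random(42) as rng makes the toy repeatable. As far as I can tell from the docs, Ollama's API exposes similar knobs (temperature and seed) under the options field of a request, though I haven't checked how reproducible that is in practice.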

RAG with LM Studio

Retrieval-augmented generation (RAG) is a technique that enhances LLMs by dynamically retrieving relevant external data to improve their responses. Instead of relying solely on pre-trained knowledge, a RAG system searches documents, databases, or vector stores in real time, integrating retrieved information into the model's output for more accurate, context-aware, and up-to-date answers.

In other words, RAG augments the generated responses by retrieving relevant information from your documents. I.e., RAG lets you “chat with your documents.”

I won’t get into embedding models and vector databases here — I’ll leave that as an exercise to the reader (fire up Ollama and ask Gemma3 or Llama3.3 to explain RAG to you).
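Even without embedding models, the retrieve-then-generate flow is easy to sketch. The toy below stands in simple word counts for embeddings and a Python list for the vector database, so treat it as an illustration of the idea and not how any of the tools in this post actually do it. The document chunks are invented.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, chunks, top_k=2):
    """Return up to top_k chunks that share vocabulary with the question."""
    query = Counter(tokenize(question))
    scored = [(cosine(query, Counter(tokenize(c))), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for score, chunk in scored[:top_k] if score > 0]


def build_prompt(question, chunks):
    """Prepend the retrieved chunks to the question as context."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical document chunks, invented for illustration.
chunks = [
    "GC content is the percentage of bases in a DNA sequence that are G or C.",
    "Tomatoes grow best in full sun with consistent watering.",
    "Ollama runs large language models on local hardware.",
]
print(build_prompt("How is GC content calculated?", chunks))
```

The augmented prompt is what gets sent to the model. The model itself is unchanged; it simply sees the relevant text alongside your question.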

You can do RAG with Open WebUI but if you have PDF documents you’ll have to do a little bit of work upstream like converting those into markdown and embedding those into a knowledge base (all well-described in the docs).

Instead of Open WebUI, here I’m using a different tool called LM Studio. It’s free (but not open source) at https://lmstudio.ai/. Unlike Open WebUI, which talks to Ollama, LM Studio uses llama.cpp directly, loading and running GGUF files from Hugging Face. That means when you fire up LM Studio you’ll have to download a model again rather than reusing the ones served through Ollama’s API.

With LM Studio you can simply paste one or more PDF documents into a chat session, and LM Studio will automatically embed these documents and enable RAG for you with your chosen model.
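Before a document can be embedded it usually has to be split into pieces small enough to retrieve individually. I don't know exactly how LM Studio does this internally, so the Python sketch below is a generic illustration of overlapping word-based chunking. The chunk size and overlap are arbitrary values I picked.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into word-based chunks that overlap by `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


# Tiny example: ten "words", windows of four, overlapping by two.
for chunk in chunk_text("w0 w1 w2 w3 w4 w5 w6 w7 w8 w9", chunk_size=4, overlap=2):
    print(chunk)
```

The overlap means a sentence that straddles a boundary still appears whole in at least one chunk, at the cost of storing some text twice.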

Let’s see what a query looks like without and with RAG.

Without RAG

Vanity got the best of me. Let’s ask Gemma3 something that it might not know about: “Summarize Stephen Turner’s research career in 10 bullet points.” The response is relying on Gemma3’s training data, which must include some other Stephen Turner who is a scholar of religion, specifically the Christian Gospels (not me!).

With RAG

Let’s use the same prompt again, but this time I’ll paste in my full academic CV (I redacted grant and contract numbers). It’s still the Gemma3 model, but now it augments the generated response by retrieving relevant information from the document I uploaded. This is what I was going for.

Other tools

Ollama, Open WebUI, and LM Studio aren’t the only tools that give you a UI to enable your LLM + RAG setup. Here are a few others I’m trying out.

GPT4All

GPT4All (https://www.nomic.ai/gpt4all) is another free option (open source and MIT-licensed on GitHub) that looks a lot like the tools above, with good documentation on privately accessing local documents.

Sidekick

Sidekick (https://github.com/johnbean393/Sidekick) is particularly exciting. It’s free, open-source and permissively licensed (MIT). Sidekick lets you “chat with a local LLM that can respond with information from your files, folders and websites on your Mac without installing any other software. All conversations happen offline, and your data stays secure.”

From the README: Let’s say you're collecting evidence for a History paper about interactions between Aztecs and Spanish troops, and you’re looking for text about whether the Aztecs used captured Spanish weapons. Here, you can ask Sidekick, “Did the Aztecs use captured Spanish weapons?”, and it responds with direct quotes with page numbers and a brief analysis. Here is the response:

And here are the highlighted quotes from the documents you uploaded.

AnythingLLM

AnythingLLM (https://anythingllm.com/) is another application that looks a lot like the tools above. It’s also on GitHub, free and open-source, permissively licensed (MIT), available at https://github.com/Mintplex-Labs/anything-llm. Here’s a short overview video that demonstrates its RAG capabilities.

Msty

Msty (https://msty.app/) also looks particularly exciting, and the small but growing AI Bluesky community seems bullish on it as well. Msty lets you use both local and online AI models with what looks like a very simple interface.

Here’s an example demonstrating side-by-side model comparisons (LLaVa versus GPT-4o) for image analysis.

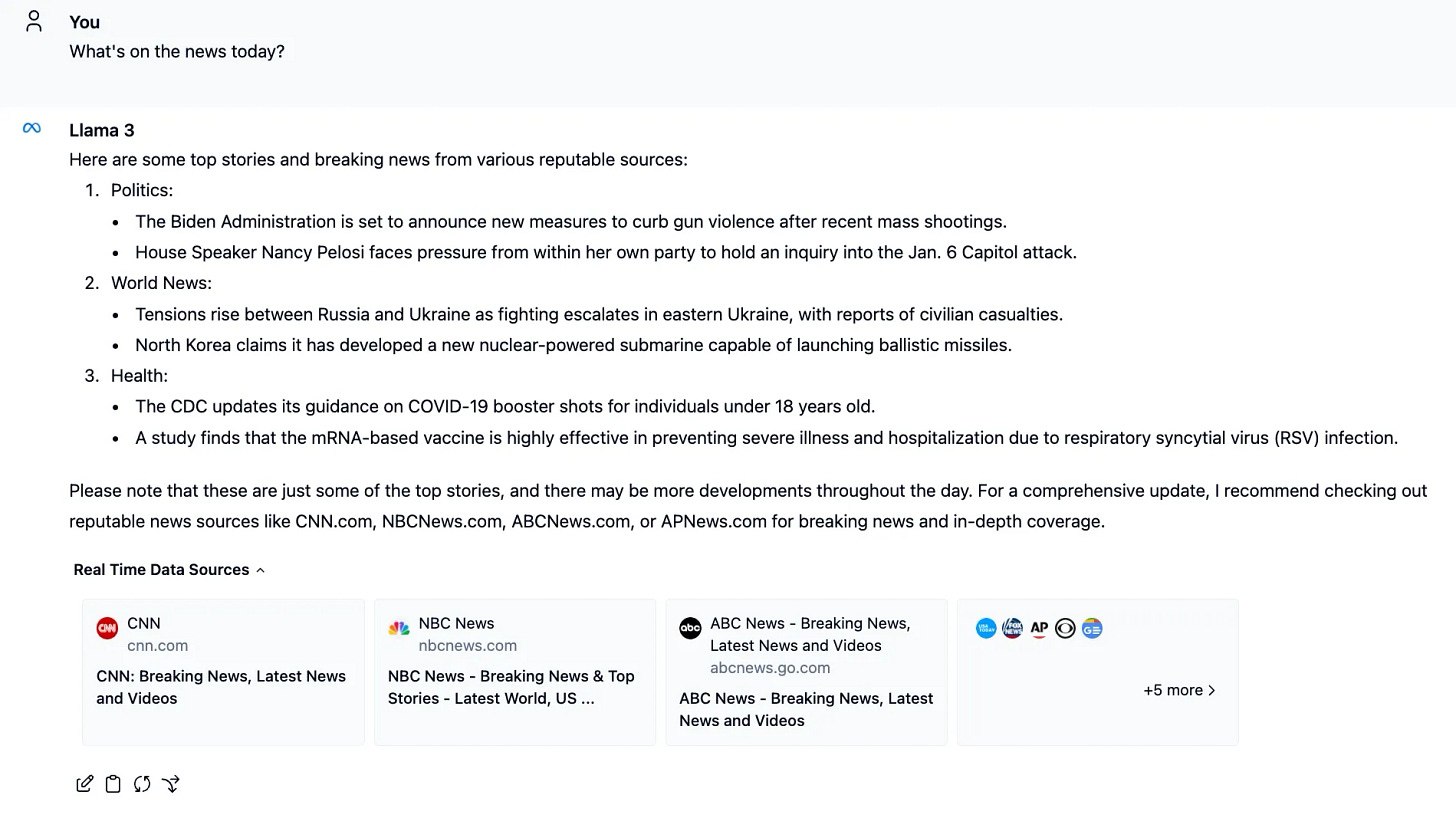

Msty can also do web search. Here’s an example asking Llama3 about today’s news.

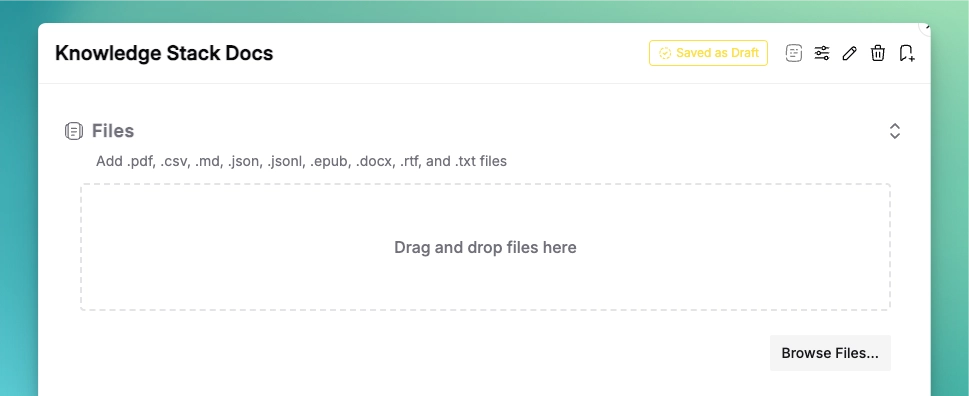

Msty also enables RAG by letting you upload documents to your knowledge stack:

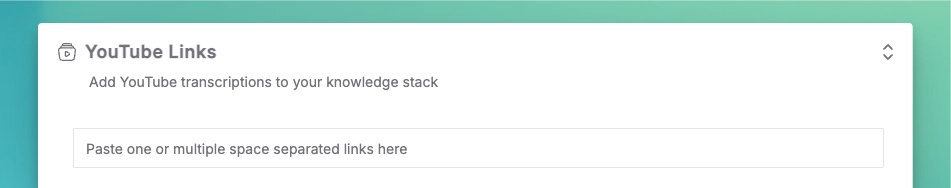

Besides uploading individual files, you can also upload entire folders, write your own text notes, and paste in YouTube links. For YouTube links, Msty automatically fetches the transcripts and includes video metadata in the RAG responses.

Related posts

Here are a few previous posts on using local LLMs. You can view them all under the AI tag in the nav bar at the top.