Weekly recap (Sep 19, 2025)

AI-generated genomes, R news for my posit::conf() FOMO, biosecurity, mirror life, teaching and AI, ELSI for AI, in defense of CRAN, Anthropic Economic Index, OpenAI report on how people use ChatGPT

Happy Friday, colleagues. Lots going on this week. Once again I’m going through my long list of idle browser tabs trying to catch up where I can. ElevenReader (no affiliation) has been a big help with that this week!

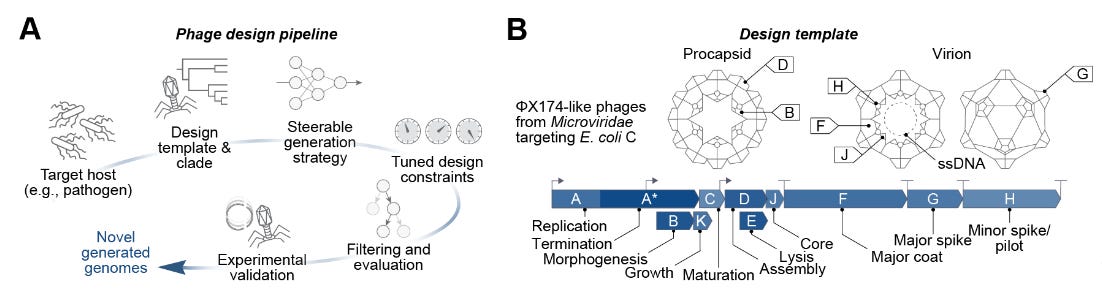

The Arc Institute reported the first-ever viable genomes designed with genome language models. Read the preprint: Generative design of novel bacteriophages with genome language models and their blog post, How We Built the First AI-Generated Genomes. And as always, Niko McCarty at Asimov Press has a really good article about Arc’s achievement: AI-Designed Phages.

The Posit conference is happening this week and I’m having some real FOMO. In the meantime, I’m reading RWeekly 2025-W38 (R6 Interfaces, ggplot2) and this week’s issue of Data Scientist with R.

Slides from Julia Silge’s talk at posit::conf(2025): How I got unstuck with Python.

Stack Overflow AI: https://stackoverflow.ai/.

NIH kicks off yearlong effort to modernize biosafety policies. See also the announcement from NIH director Jay Bhattacharya: NIH Launches Initiative to Modernize and Strengthen Biosafety Oversight.

Speaking of biosafety, Nature published an opinion piece and a news piece this week about discussions of research limitations on mirror life, at meetings in Manchester this week and at a later National Academies meeting. See also Alexander Titus’s essay, Shock Doctrine in the Life Sciences - When Fear Overwhelms Facts. The Nature pieces:

How should ‘mirror life’ research be restricted? Debate heats up.

Mirror of the unknown: should research on mirror-image molecular biology be stopped?

Earlier this week I wrote a little more about these two commentaries and the upcoming meetings.

Tangentially related, a new paper in Human Genetics: Regulating genome language models: navigating policy challenges at the intersection of AI and genetics.

Posit Blog: 2025-09-12 AI Newsletter. Anthropic copyright settlement, agentic browsers, Posit news. Sign up for the newsletter here.

All pretenses dropped. Google quietly added a built-in homework helper feature to Chrome that automatically shows up in Canvas assignments. This Washington Post article from yesterday says that Google rolled back the feature after outcry from teachers, but it’s still showing up for me.

Claus Wilke: Teaching data visualization in the time of generative AI. Recognizing that AI can’t be banned or ignored, that AI detectors don’t work, and that in-class oral / handwritten exams don’t work for this kind of thing, Claus will be taking an approach similar to NeurIPS’s LLM policy, requiring students to reflect on their AI use and how they’re engaging with AI. And those assignments will be peer-graded, the idea being that students won’t want to admit to each other that they did the entire assignment with ChatGPT.

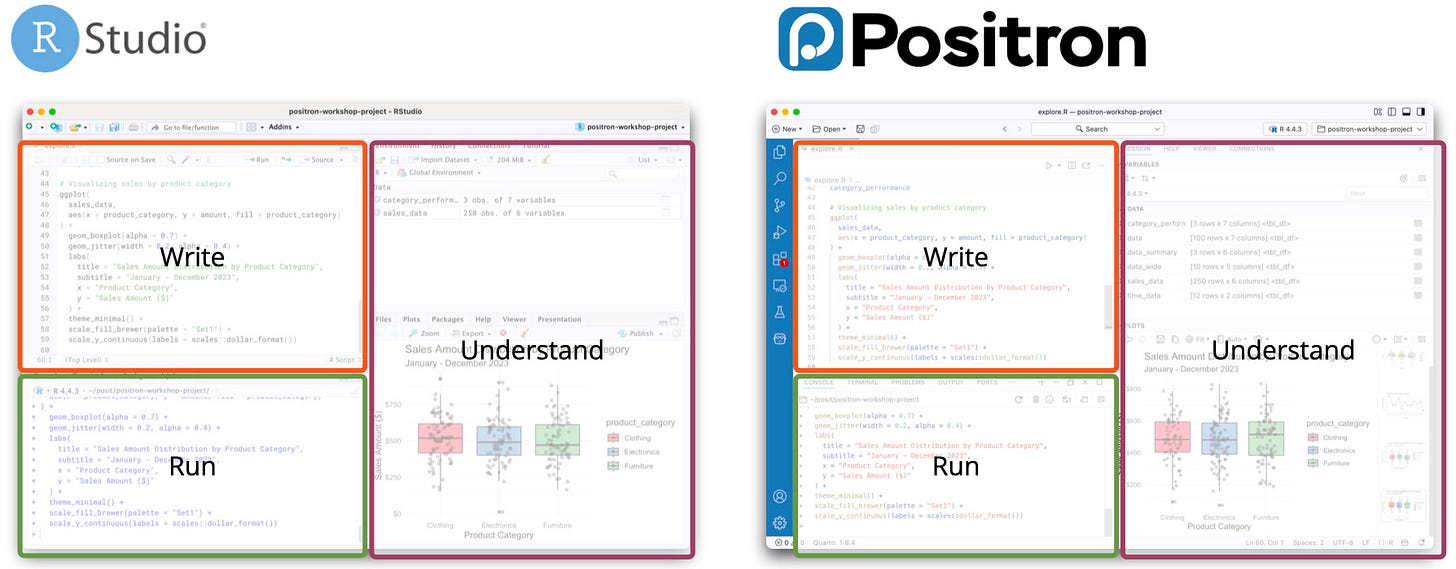

Positron docs: Migrating from RStudio to Positron.

Alondra Nelson: An ELSI for AI.

Julie Tibshirani — If all the world were a monorepo: The R ecosystem and the case for extreme empathy in software maintenance.

Point, counterpoint. Colossal: Are the Colossal dire wolves real? Cf. the commentary paper: The dire wolf (Aenocyon dirus) resurrection that wasn’t. Some good points here, but I think some of the really cool science that was done as part of this project was downplayed. A quote straight from the paper: “if the deactivation of the LCORL gene is sufficient to increase body size in gray wolves—and in a manner that mimics the genetic lesion of the dire wolf LCORL gene—we have learned something extraordinary about the genetic basis of adaptation in an extinct animal.” This is a remarkable insight into an extinct species, no matter the position of the rest of the paper.

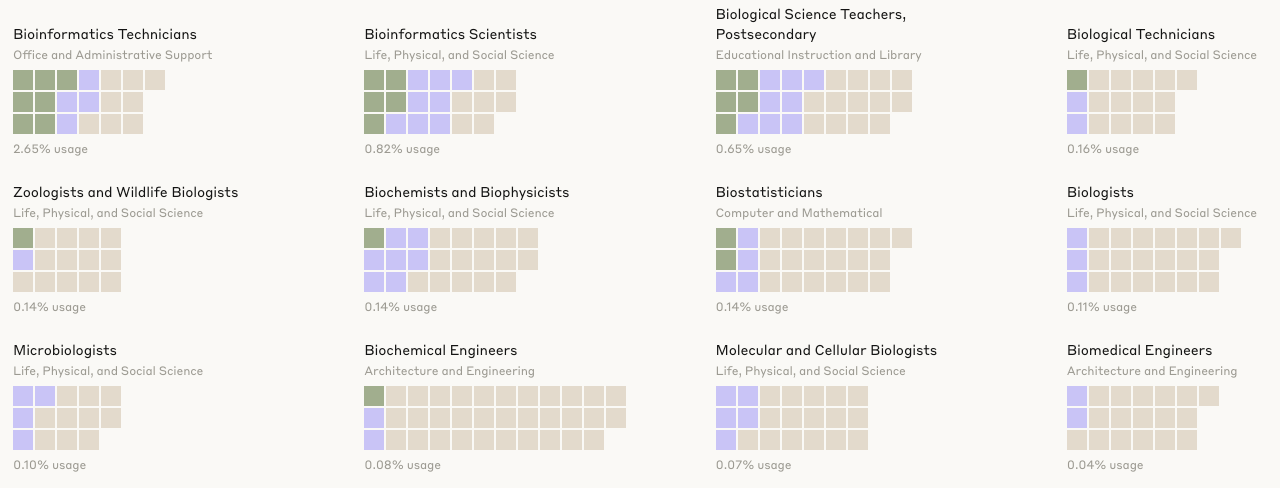

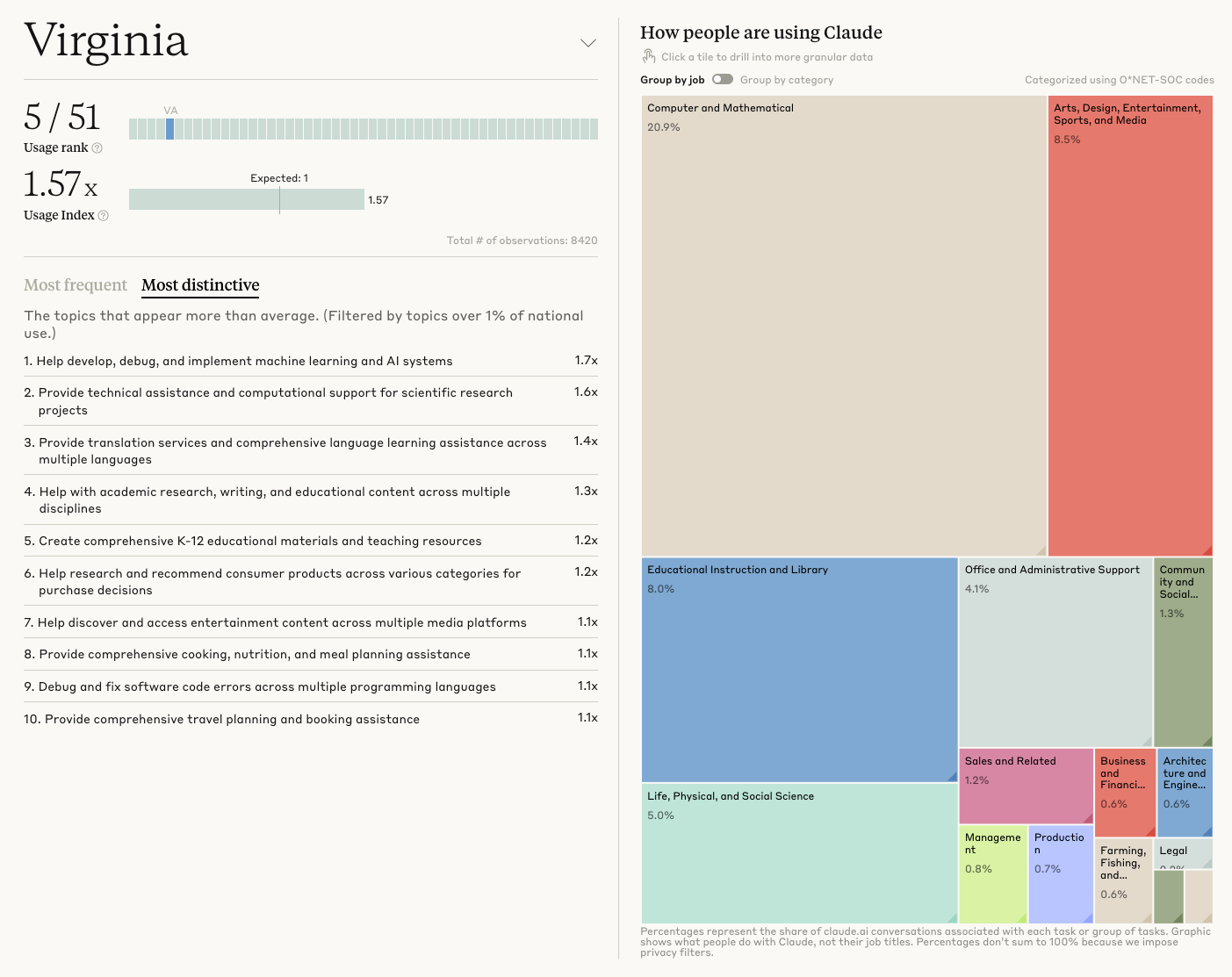

Anthropic Economic Index: Understanding AI’s effects on the economy. That link takes you to the interactive web app. See also the report.

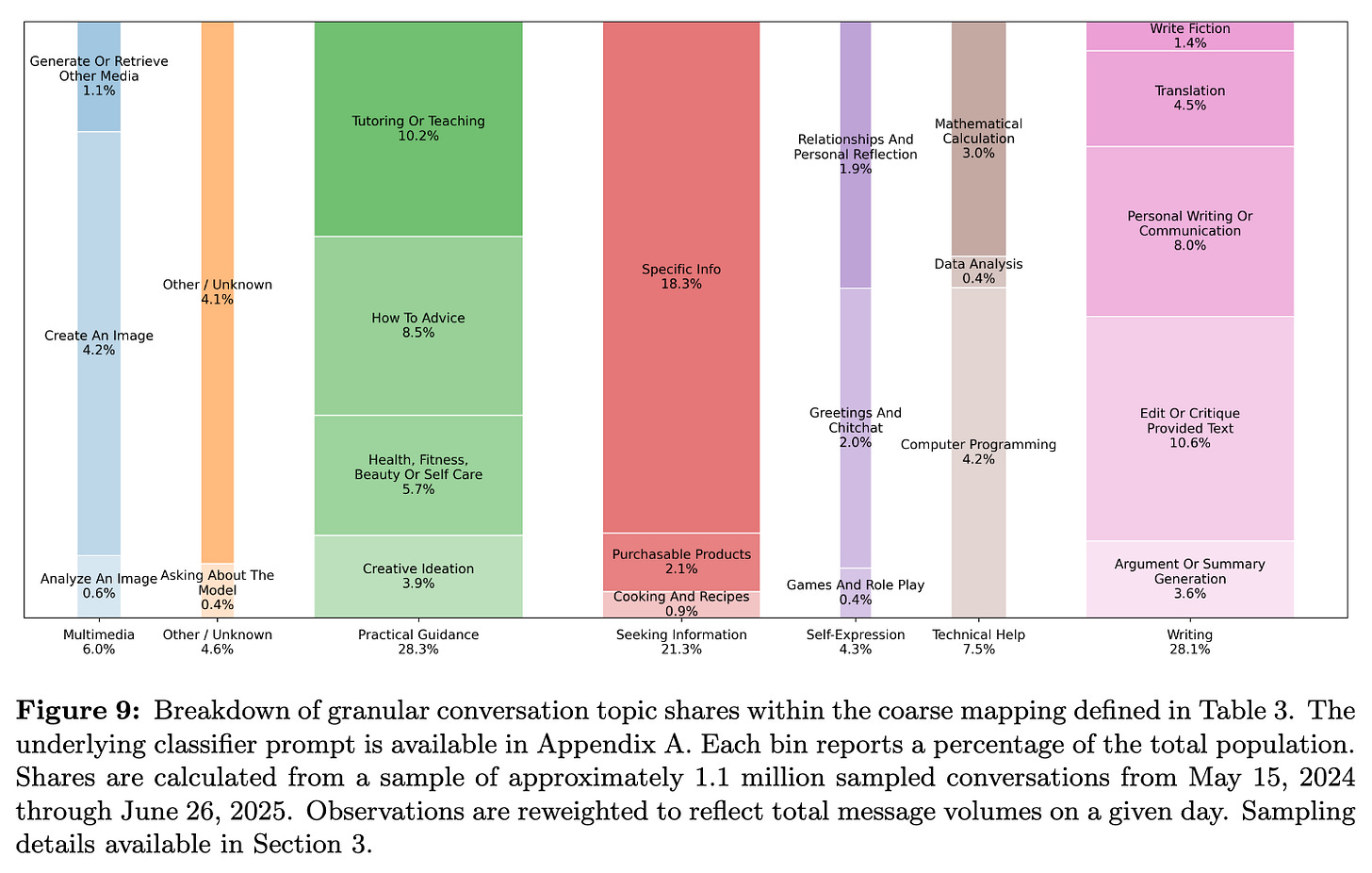

NBER paper from OpenAI: How People Use ChatGPT (pdf). OpenAI examines >1 million chats and provides a detailed breakdown of how people are using ChatGPT. Practical guidance (tutoring, how-to, what to do about this weird rash) and writing (editing, critiquing, doing your English homework) dominate.

It’s a bit of a reminder that I (we?) live in a bubble: programming and data analysis combined account for 4.6% of overall use.

GPT-5-Codex, and its system prompt.

How OpenAI uses Codex (PDF).

The DeepSeek-R1 paper was published in Nature this week: DeepSeek-R1 incentivizes reasoning in LLMs through reinforcement learning.

$XBI is doing okay (below), and yet: Boston biotech startups, jobs, and science are being swept away.

Parul Pandey: My Experiments with NotebookLM for Teaching.

Renee Hoch, Head of Publication Ethics at PLOS: The promise and perils of AI use in peer review. I have my own misgivings on this topic, but the author makes some good points throughout.

AI can help improve the consistency with which journals enforce their standards and policies. For example, AI can detect and produce review reports querying issues such as incomplete, unverifiable, or retracted references, problematic statistical analyses, and non-adherence to data availability and pre-registration requirements. Human reviewers are inconsistent in the degree to which they address these types of issues which can directly impact integrity and reproducibility. […] Moving toward a hybrid human+AI peer review model could mitigate known pain points in peer review, including the heavy burden peer review places on academics and longer-than-ideal peer review timings. If AI covers technical aspects of the assessment, then perhaps we can use fewer reviewers to cover aspects of peer review that require uniquely human executive functioning capabilities.

alphaXiv webinar with Cornelius Wolff: How well do LLMs reason over tabular data?