Weekly Recap (December 12, 2025)

GPT-5.2, DARPA Generative Optogenetics, don't use local models for coding agents, red teaming, sandbagging, AI in science & education, MCP, R updates (R Data Scientist, R Weekly, R Works), new papers

GPT-5.2 was released yesterday. Gemini 3’s release put OpenAI into a “code red” earlier this month. OpenAI calls this “the most capable model series yet for professional knowledge work.” Looks like most of the benchmarks are self-reported, so it’ll be interesting to see where 5.2 lands in various arenas and external benchmarking. I thought the more interesting notes from the announcement were the examples illustrating how GPT-5.2 can create spreadsheets for things like workforce planning models (headcount, hiring plans, attrition, budget impact), cap tables (Seed, Series A, and Series B liquidation preferences, equity payout calculations), and detailed slide decks. See also the GPT-5.2 system card, which has an updated biosecurity section:

We are treating this launch as High capability in the Biological and Chemical domain, activating the associated Preparedness safeguards. We do not have definitive evidence that these models could meaningfully help a novice to create severe biological harm, our defined threshold for High capability, and these models remain on the cusp of being able to reach this capability. Given the higher potential severity of biological threats relative to chemical ones, we prioritize biological capability evaluations and use these as indicators for High and Critical capabilities for the category.

DARPA BTO launches the Generative Optogenetics program.

Synthetic DNA and RNA molecules are foundational to a host of next-generation technologies crucial for national security and global well-being, impacting everything from resilient supply chains and advanced materials manufacturing to sustainable agriculture and human health. However, current methods for creating these molecules de novo face significant limitations in terms of scale, complexity, and environmental impact. The Generative Optogenetics (GO) program seeks to overcome these challenges by pioneering a revolutionary approach: Harnessing the power of light to direct the synthesis of DNA and RNA directly within living cells.

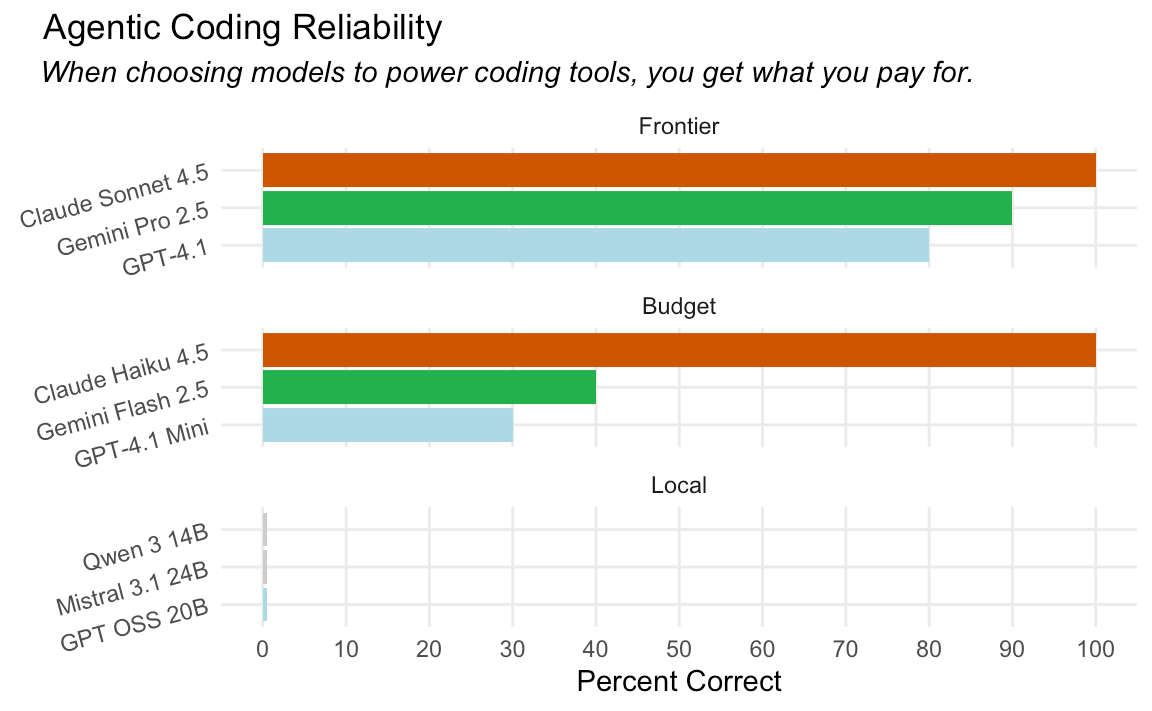

Simon Couch: Local models are not there (yet). LLMs that can run on your laptop are not yet capable enough to drive coding agents. This aligns with my own dabbling with local models. I recently gave a talk at our local R User Group demonstrating Positron Assistant, Databot, and Codex. I led with a general recommendation: just put $5 on your Anthropic API key and don’t bother with local models, unless you absolutely have to for security reasons (and note that the major frontier model providers don’t train on your inputs or outputs when using the API).

Anthropic: Claude for Nonprofits.

The R Data Scientist 2025-12-09: Community & events, AI newsletters, tooling & infrastructure, applied visualizations, research & open science, statistical methods & inference, academic research.

The Test Set podcast from Posit is now on YouTube. Here’s the latest episode, ep. 11: Kelly Bodwin on Quarto hacks, AI in the classroom, and why R should stay weird.

R Weekly 2025-W50: New AI Newsletter, Test Set on Youtube, Haskell for Data Science

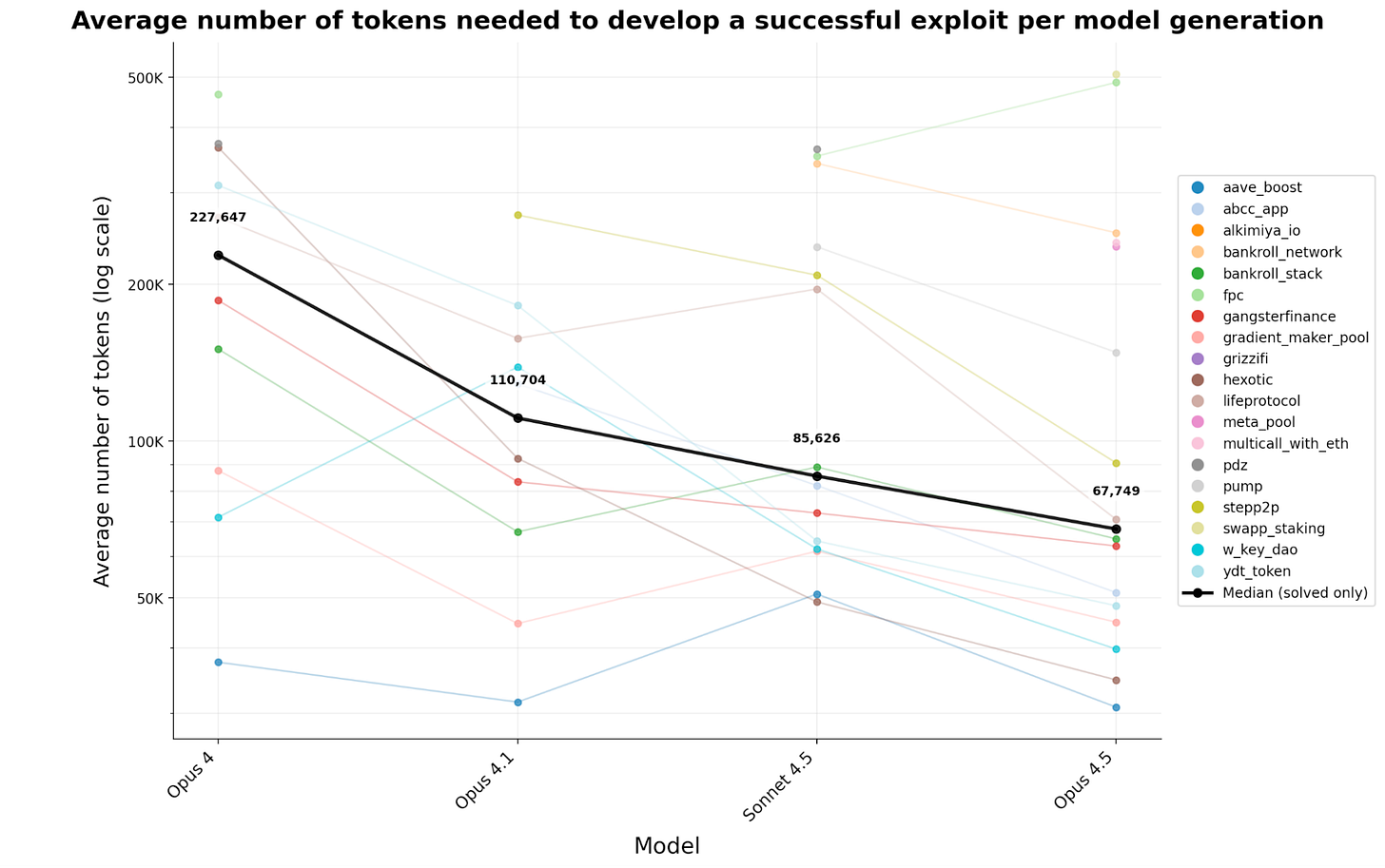

Anthropic Frontier Red Team Blog: AI agents find $4.6M in blockchain smart contract exploits. Anthropic evaluated AI agents’ ability to exploit smart contracts using a new benchmark comprising contracts that were actually exploited. On contracts exploited after the latest knowledge cutoff, Claude Opus 4.5, Claude Sonnet 4.5, and GPT-5 found vulnerabilities worth a combined $4.6 million, a finding that underscores the need for proactive adoption of AI for defense.

OpenAI: People-First AI Fund grantees. The OpenAI Foundation is announcing the first recipients from the People-First AI Fund, a multi-million dollar investment in community-based nonprofits working to strengthen local communities and expand the opportunity of AI.

Anthropic: donating MCP to the Linux Foundation and establishing the Agentic AI Foundation. Anthropic announced that it’s donating the Model Context Protocol (MCP) to the Agentic AI Foundation (AAIF), a directed fund under the Linux Foundation, co-founded by Anthropic, Block and OpenAI, with support from Google, Microsoft, Amazon Web Services (AWS), Cloudflare, and Bloomberg.

Joe Rickert at R Works: October 2025 Top 40 New CRAN Packages. Joe’s picks for the best of the 204 new packages that landed on CRAN in October. Packages are organized into categories including Data, Decision Analysis, Ecology, Econometrics, Finance, Genomics, Logic, Machine Learning, Mathematics, Medical Statistics, Statistics, Time Series, Utilities, and Visualization.

NYT (gift link): I’m a Professor. A.I. Has Changed My Classroom, but Not for the Worse. “My students’ easy access to chatbots forced me to make humanities instruction even more human.”

Simon Couch & Sara Altman: 2025-12-05 Posit AI Newsletter: model releases (Gemini 3 Pro, GPT 5.1 Pro, DeepSeek 3.2, Grok 4.1, Claude Opus 4.5), Posit news, and terms (consumer pricing vs. API pricing).

Elsevier’s new report: Researcher of the Future: Confidence in Research.

American Science, Shattered: A multipart series at STAT on how the administration has disrupted labs, upended lives, and delayed discoveries.

Nature: China is redrawing the global science map.

Everyone in Seattle Hates AI. “Her PM had been laid off months earlier. The team asked why. Their director told them it was because the PM org ‘wasn’t effective enough at using Copilot 365.’ […] If you could classify your project as ‘AI,’ you were safe and prestigious. If you couldn’t, you were nobody. Overnight, most engineers got rebranded as ‘not AI talent.’”

Abhishaike Mahajan (Owl Posting) interviews Yunha Hwang: We don’t know what most microbial genes do. Can genomic language models help?

RAND Report: A Prisoner’s Dilemma in the Race to Artificial General Intelligence.

UKRI tests AI for grant peer review to tackle surging funding applications. I’m pretty sure this was already happening in stealth anyway.

From OpenRouter: State of AI: An Empirical 100 Trillion Token Study with OpenRouter (PDF). It’s interesting to look at how different models are used for programming versus… ahem… uh, “role play.”

bookdown.org is being decommissioned. Posit recommends moving to Posit Connect Cloud.

NOT-OD-26-009: Updated Terms and Conditions of Award Termination and Compliance with Court Orders.

Effective October 1, 2025, all new NIH Notices of Award will include the following terms: [awards] may also otherwise be terminated […] if the agency determines that the award no longer effectuates the program goals or agency priorities.

Adam Kucharski: Science is screwed. Or is it? We can’t do everything, but we can do things.

EMBL blog: Connecting AI to biology: Model Context Protocol.

Ronald Purser in Current Affairs: AI is Destroying the University and Learning Itself. I liked this essay but took issue with one of its central theses, which I wrote about earlier this week.

Finally, a few other papers and preprints that caught my attention this week:

Gene-drive-capable mosquitoes suppress patient-derived malaria in Tanzania

Impact of the 23andMe bankruptcy on preserving the public benefit of scientific data

bronko: ultrafast, alignment-free detection of viral genome variation

PULSAR: a Foundation Model for Multi-scale and Multicellular Biology

eggNOG v7: phylogeny-based orthology predictions and functional annotations

The eXplainable Artificial Intelligence (XAI) Triad: Models, Importances, and Significance at Scale

BioContextAI is a community hub for agentic biomedical systems (Nature Biotechnology)