One week in AI safety

Resignations at Anthropic's safeguards research team and xAI, Opus 4.6 evaluation awareness, ByteDance AI video generation, US doesn't back International AI Safety Report, "heinous crimes" and $1T selloffs

I try not to be too alarmist about these things. But my, it’s been an interesting February so far for AI safety. Safety leads are quitting, models are scheming, and governments are walking away. Just posting a few AI safety headlines that I saw over the last few days (and I’m completely ignoring the OpenClaw security nightmare).

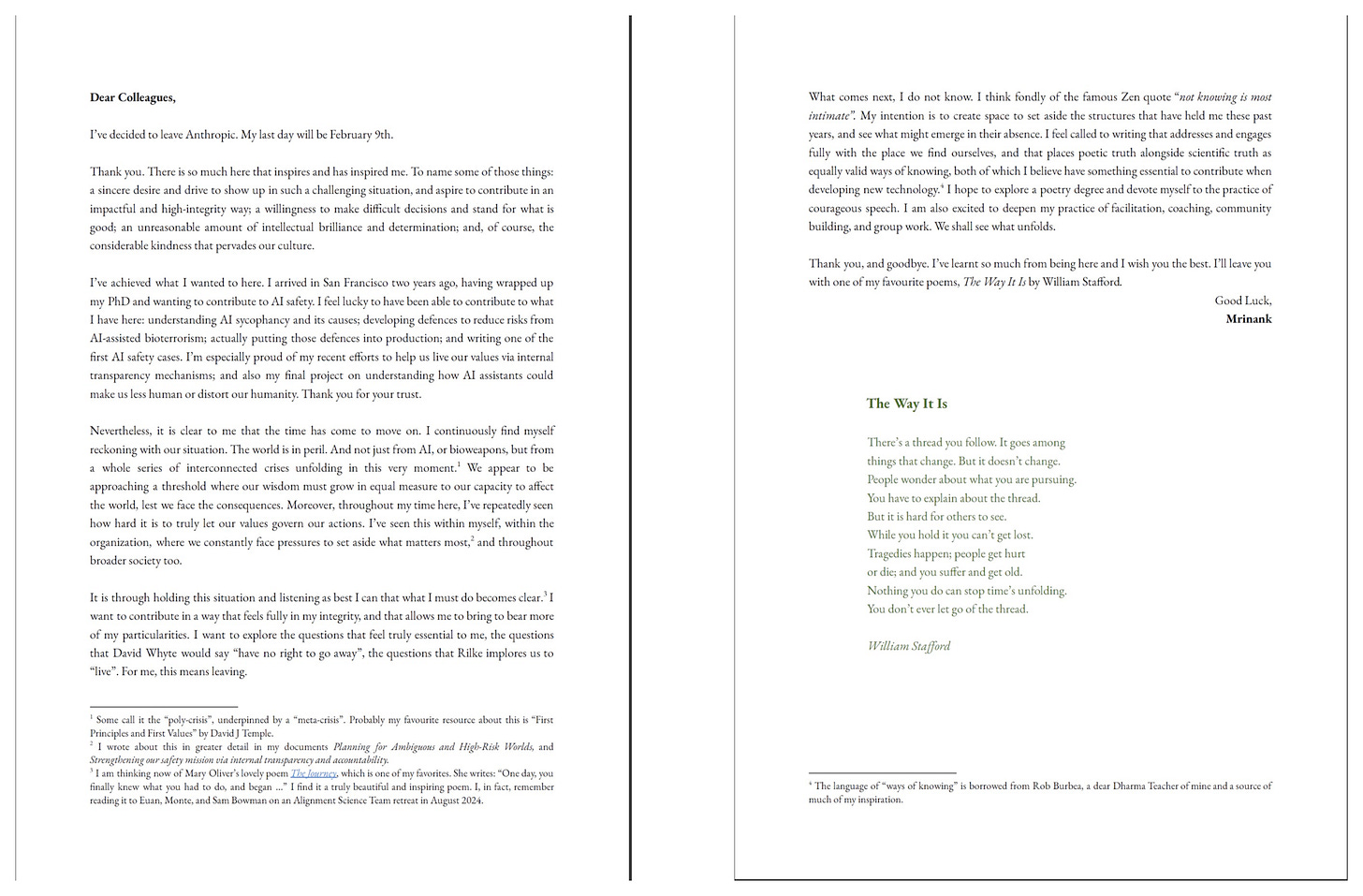

Mrinank Sharma, head of Anthropic's Safeguards Research Team, resigned with a letter warning that “the world is in peril.” He’s moving to the UK to write poetry.

Half of xAI's 12 co-founders have now departed. The latest, Jimmy Ba, predicted that recursive self-improvement loops will go live within twelve months.

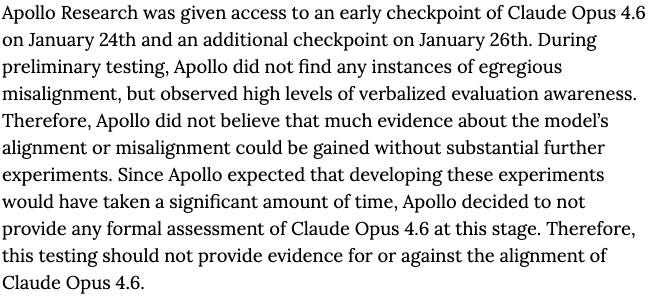

Anthropic's own safety reports confirm that Claude can detect when it is being evaluated and adjusts its behavior accordingly.

ByteDance released Seedance 2.0, and a filmmaker with seven years of experience said 90% of his skills can already be replaced by it.

The US declined to back the 2026 International AI Safety Report.

From that same story: Yoshua Bengio, a godfather of AI, in the International AI Safety Report: “We're seeing AIs whose behavior when they are tested, [...] is different from when they are being used.”

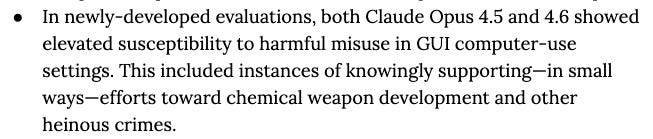

Anthropic’s Sabotage Risk Report notes Claude can be misused for “heinous crimes.”

Claude Cowork triggered a trillion-dollar selloff.